SAM 3: Segment Anything with Concepts

Nicolas Carion, Laura Gustafson, Yuan-Ting Hu, Shoubhik Debnath, Ronghang Hu, Didac Suris, Chaitanya Ryali, Kalyan Vasudev Alwala, Haitham Khedr, Andrew Huang, Jie Lei, Tengyu Ma, Baishan Guo, Arpit Kalla, Markus Marks, Joseph Greer, Meng Wang, Peize Sun, Roman Rädle, Triantafyllos Afouras, Effrosyni Mavroudi, Katherine Xu, Tsung-Han Wu, Yu Zhou, Liliane Momeni, Rishi Hazra, Shuangrui Ding, Sagar Vaze, Francois Porcher, Feng Li, Siyuan Li, Aishwarya Kamath, Ho Kei Cheng, Piotr Dollár, Nikhila Ravi, Kate Saenko, Pengchuan Zhang, Christoph Feichtenhofer

Meta Superintelligence Labs

core contributor

intern

project lead

order is random within groups

intern

project lead

order is random within groups

Abstract

We present Segment Anything Model (SAM) 3, a unified model that detects, segments, and tracks objects in images and videos based on concept prompts, which we define as either short noun phrases (e.g., ``yellow school bus''), image exemplars, or a combination of both. Promptable Concept Segmentation (PCS) takes such prompts and returns segmentation masks and unique identities for all matching object instances. To advance PCS, we build a scalable data engine that produces a high-quality dataset with 4M unique concept labels, including hard negatives, across images and videos. Our model consists of an image-level detector and a memory-based video tracker that share a single backbone. Recognition and localization are decoupled with a presence head, which boosts detection accuracy. SAM 3 doubles the accuracy of existing systems in both image and video PCS, and improves previous SAM capabilities on visual segmentation tasks. We open source SAM 3 along with our new Segment Anything with Concepts (SA-Co) benchmark for promptable concept segmentation.

Demo: https://segment-anything.com

Code: https://github.com/facebookresearch/sam3

Website: https://ai.meta.com/sam3

Code: https://github.com/facebookresearch/sam3

Website: https://ai.meta.com/sam3

1. Introduction

The ability to find and segment anything in a visual scene is foundational for multimodal AI, powering applications in robotics, content creation, augmented reality, data annotation, and broader sciences. The SAM series ([1,2]) introduced the promptable segmentation task for images and videos, focusing on Promptable Visual Segmentation (PVS) with points, boxes or masks to segment a single object per prompt. While these methods achieved a breakthrough, they did not address the general task of finding and segmenting all instances of a concept appearing anywhere in the input (e.g., all "cats" in a video).

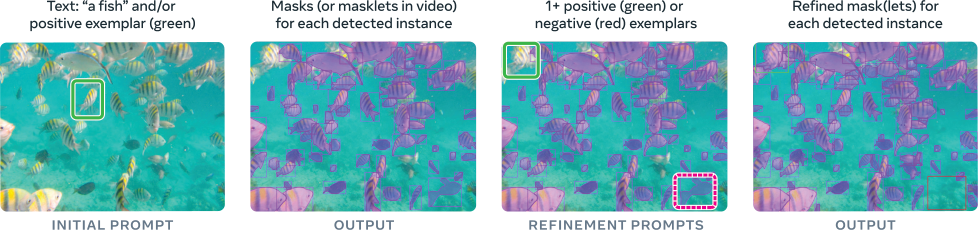

To fill this gap, we present SAM 3, a model that achieves a step change in promptable segmentation in images and videos, improving PVS relative to SAM 2 and setting a new standard for Promptable Concept Segmentation (PCS). We formalize the PCS task (§ 2) as taking text and/or image exemplars as input, and predicting instance and semantic masks for every single object matching the concept, while preserving object identities across video frames (see Figure 1). To focus on recognizing atomic visual concepts, we constrain text to simple noun phrases (NPs) such as "red apple" or "striped cat". While SAM 3 is not designed for long referring expressions or queries requiring reasoning, we show that it can be straightforwardly combined with a Multimodal Large Language Model (MLLM) to handle more complex language prompts. Consistent with previous SAM versions, SAM 3 is fully interactive, allowing users to resolve ambiguities by adding refinement prompts to guide the model towards their intended output.

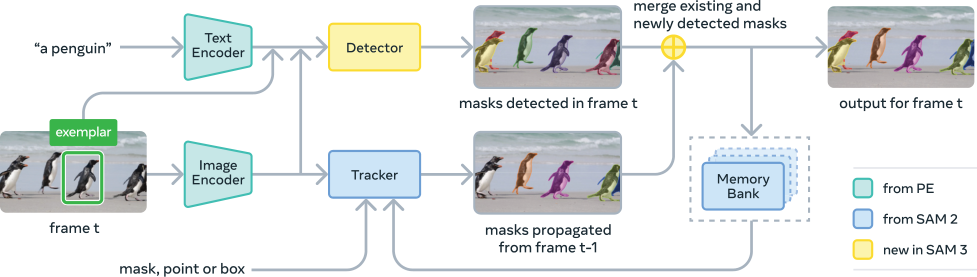

Our model (§ 3) consists of a detector and a tracker that share a vision encoder ([4]). The detector is a DETR-based ([5]) model conditioned on text, geometry, and image exemplars. To address the challenge of open-vocabulary concept detection, we introduce a separate presence head to decouple recognition and localization, which is especially effective when training with challenging negative phrases. The tracker inherits the SAM 2 transformer encoder-decoder architecture, supporting video segmentation and interactive refinement. The decoupled design for detection and tracking avoids task conflict, as the detector needs to be identity agnostic, while the tracker's main objective is to separate identities in the video.

To unlock major performance gains, we build a human- and model-in-the-loop data engine (§ 4) that annotates a large and diverse training dataset. We innovate upon prior data engines in three key ways: (i) media curation: we curate more diverse media domains than past approaches that rely on homogeneous web sources, (ii) label curation: we significantly increase label diversity and difficulty by leveraging an ontology and multimodal LLMs as "AI annotators" to generate noun phrases and hard negatives, (iii) label verification: we double annotation throughput by fine-tuning MLLMs to be effective ``AI verifiers” that achieve near-human accuracy.

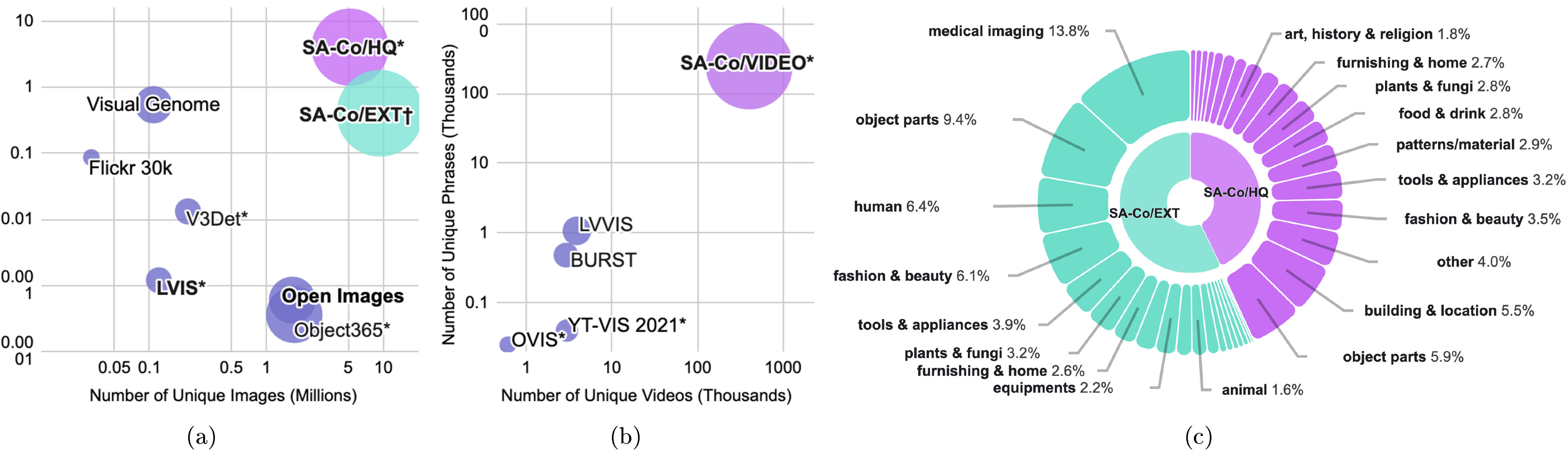

Starting from noisy media-phrase-mask pseudo-labels, our data engine checks mask quality and exhaustivity using both human and AI verifiers, filtering out correctly labeled examples and identifying challenging error cases. Human annotators then focus on fixing these errors by manually correcting masks. This enables us to annotate high-quality training data with 4M unique phrases and 52M masks, and a synthetic dataset with 38M phrases and 1.4B masks. We additionally create the Segment Anything with Concepts ({SA-Co}) benchmark for PCS (§ 5) containing 207K unique concepts with exhaustive masks in 120K images and 1.7K videos, more concepts than existing benchmarks.

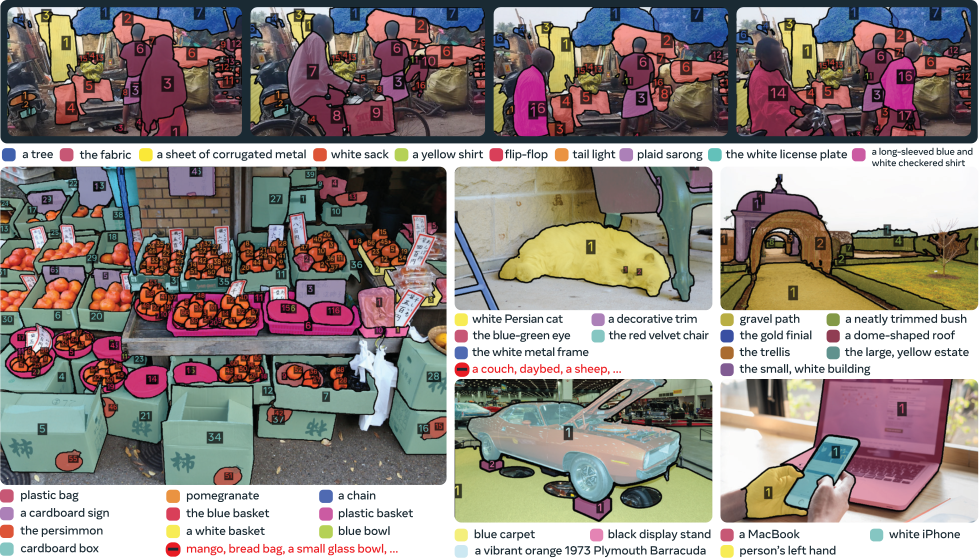

Our experiments (§ 6) show that SAM 3 sets a new state-of-the-art in promptable segmentation, e.g., reaching a zero-shot mask AP of 48.8 on LVIS vs. the current best of 38.5, surpassing baselines on our new SA-Co benchmark by at least (see examples in Figure 2), and improving upon SAM 2 on visual prompts. Ablations (§ A) verify that the choice of backbone, novel presence head, and adding hard negatives all boost results, and establish scaling laws on the PCS task for both our high-quality and synthetic datasets. We open-source the SA-Co benchmark and release the SAM 3 checkpoints and inference code. On an H200 GPU, SAM 3 runs in 30 ms for a single image with 100+ detected objects. In video, the inference latency scales with the number of objects, sustaining near real-time performance for concurrent objects. We review related work in § 7; next, we dive into the task.

2. Promptable Concept Segmentation (PCS)

We define the Promptable Concept Segmentation task as follows: given an image or short video ( 30 secs), detect, segment and track all instances of a visual concept specified by a short text phrase, image exemplars, or a combination of both. We restrict concepts to those defined by simple noun phrases (NPs) consisting of a noun and optional modifiers. Noun-phrase prompts (when provided) are global to all frames of the image/video, while image exemplars can be provided on individual frames as positive or negative bounding boxes to iteratively refine the target masks (see Figure 3).

All prompts must be consistent in their category definition, or the model's behavior is undefined; e.g., "fish" cannot be refined with subsequent exemplar prompts of just the tail; instead the text prompt should be updated. Exemplar prompts are particularly useful when the model initially misses some instances, or when the concept is rare.

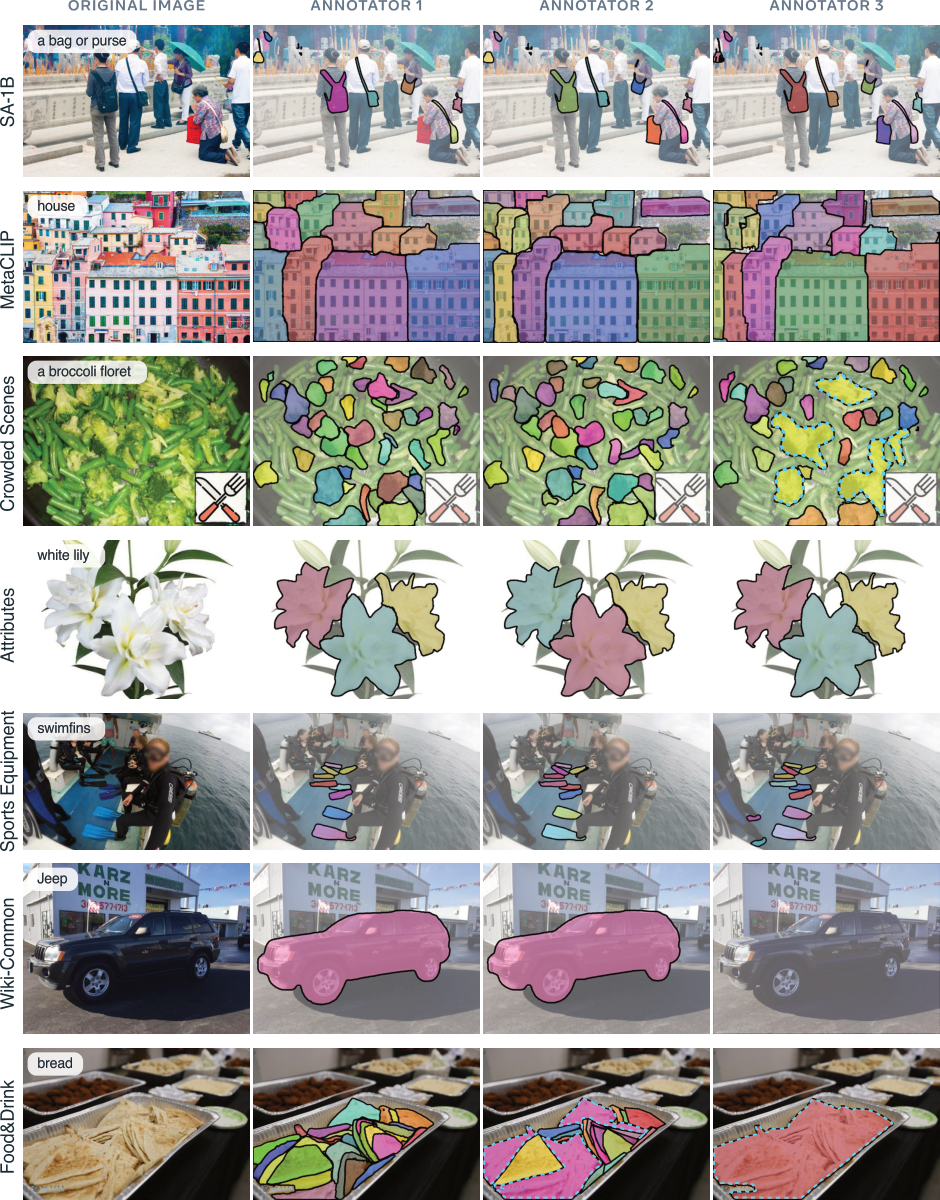

Our vocabulary includes any simple noun phrase groundable in a visual scene, which makes the task intrinsically ambiguous. There can be multiple interpretations of phrases arising from polysemy (“mouse” device vs. animal), subjective descriptors (“cozy”, “large”), vague or context-dependent phrases that may not even be groundable (“brand identity”), boundary ambiguity (whether 'mirror' includes the frame) and factors such as occlusion and blur that obscure the extent of the object. While similar issues appear in large closed-vocabulary corpora (e.g., LVIS ([6])), they are alleviated by carefully curating the vocabulary and setting a clear definition of all the classes of interest. We address the ambiguity problem by collecting test annotations from three experts, adapting the evaluation protocol to allow multiple valid interpretations (§ E.3), designing the data pipeline/guidelines to minimize ambiguity in annotation, and an ambiguity module in the model (§ C.2).

3. Model

SAM 3 is a generalization of SAM 2, supporting the new PCS task (§ 2) as well as the PVS task. It takes concept prompts (simple noun phrases, image exemplars) or visual prompts (points, boxes, masks) to define the objects to be (individually) segmented spatio-temporally. Image exemplars and visual prompts can be iteratively added on individual frames to refine the target masks---false positive and false negative objects can be removed or added respectively using image exemplars and an individual mask(let) can be refined using PVS in the style of SAM 2. Our architecture is broadly based on the SAM and (M)DETR ([5,7]) series. Figure 4 shows the SAM 3 architecture, consisting of a dual encoder-decoder transformer---a detector for image-level capabilities---which is used in combination with a tracker and memory for video. The detector and tracker ingest vision-language inputs from an aligned Perception Encoder (PE) backbone ([4]). We present an overview below, see § C for details.

3.1.1.1 Detector Architecture

The architecture of the detector follows the general DETR paradigm. The image and text prompt are first encoded by PE and image exemplars, if present, are encoded by an exemplar encoder. We refer to the image exemplar tokens and text tokens jointly as "prompt tokens". The fusion encoder then accepts the unconditioned embeddings from the image encoder and conditions them by cross-attending to the prompt tokens. The fusion is followed by a DETR-like decoder, where learned object queries cross-attend to the conditioned image embeddings from the fusion encoder.

Each decoder layer predicts a classification logit for each object query (in our case, a binary label of whether the object corresponds to the prompt), and a delta from the bounding box predicted by the previous level, following [8]. We use box-region-positional bias ([9]) to help focalize the attention on each object, but unlike recent DETR models, we stick to vanilla attention. During training, we adopt dual supervision from DAC-DETR ([10]), and the Align loss ([11]). The mask head is adapted from MaskFormer ([12]). In addition, we also have a semantic segmentation head, which predicts a binary label for every pixel in the image, indicating whether or not it corresponds to the prompt. See § C for details.

Presence Token

It can be difficult for each of the proposal queries to both recognize (what) and localize (where) an object in the image/frame. For the recognition component, contextual cues from the entire image are important. However, forcing proposal queries to understand the global context can be counterproductive, as it conflicts with the inherently local nature of the localization objective. We decouple the recognition and localization steps by introducing a learned global presence token. This token is solely responsible for predicting whether the target concept in the form of a noun phrase (NP) is present in the image/frame, i.e. . Each proposal query only needs to solve the localization problem . The final score for each proposal query is the product of its own score and the presence score.

Image Exemplars and Interactivity

SAM 3 supports image exemplars, given as a pair---a bounding box and an associated binary label (positive or negative)---which can be used in isolation or to supplement the text prompt. The model then detects all the instances that match the prompt. For example, given a positive bounding box on a dog, the model will detect all dogs in the image. This is different from the PVS task in SAM 1 and 2, where a visual prompt yields only a single object instance. Each image exemplar is encoded separately by the exemplar encoder using an embedding for the position, an embedding for the label, and ROI-pooled visual features, then concatenated and processed by a small transformer. The resulting prompt is concatenated to the text prompt to comprise the prompt tokens. Image exemplars can be interactively provided based on errors in current detections to refine the output.

3.1.1.2 Tracker and Video Architecture

Given a video and a prompt , we use the detector and a tracker (see Figure 4) to detect and track objects corresponding to the prompt throughout the video. On each frame, the detector finds new objects and the tracker propagates masklets (spatial-temporal masks) from frames at the previous time to their new locations on the current frame at time . We use a matching function to associate propagated masklets with new object masks emerging in the current frame ,

Tracking an Object with SAM 2 Style Propagation

A masklet is initialized for every object detected on the first frame. Then, on each subsequent frame, the tracker module predicts the new masklet locations of those already-tracked objects based on their previous locations through a single-frame propagation step similar to the video object segmentation task in SAM 2. The tracker shares the same image/frame encoder (PE backbone) as the detector. After training the detector, we freeze PE and train the tracker as in SAM 2, including a prompt encoder, mask decoder, memory encoder, and a memory bank that encodes the object's appearance using features from the past frames and conditioning frames (frames where the object is first detected or user-prompted). The memory encoder is a transformer with self-attention across visual features on the current frame and cross-attention from the visual features to the spatial memory features in the memory bank. We describe details of our video approach in § C.3.

During inference, we only retain frames where the object is confidently present in the memory bank. The mask decoder is a two-way transformer between the encoder hidden states and the output tokens. To handle ambiguity, we predict three output masks for every tracked object on each frame along with their confidence, and select the most confident output as the predicted mask on the current frame.

Matching and Updating Based on Detections

After obtaining the tracked masks , we match them with the current frame detections through a simple IoU based matching function (§ C.3) and add them to on the current frame. We further spawn new masklets for all newly detected objects that are not matched. The merging might suffer from ambiguities, especially in crowded scenes. We address this with two temporal disambiguation strategies outlined next.

First, we use temporal information in the form of a masklet detection score (§ C.3) to measure how consistently a masklet is matched to a detection within a temporal window (based on the number of past frames where it was matched to a detection). If a masklet's detection score falls below a threshold, we suppress it. Second, we use the detector outputs to resolve specific failure modes of the tracker due to occlusions or distractors. We periodically re-prompt the tracker with high-confidence detection masks , replacing the tracker's own predictions . This ensures that the memory bank has recent and reliable references (other than the tracker's own predictions).

Instance Refinement with Visual Prompts

After obtaining the initial set of masks (or masklets), SAM 3 allows refining individual masks(lets) using positive and negative clicks. Specifically, given the user clicks, we apply the prompt encoder to encode them, and feed the encoded prompt into the mask decoder to predict an adjusted mask. In videos the mask is then propagated across the entire video to obtain a refined masklet.

3.1.1.3 Training Stages

We train SAM 3 in four stages that progressively add data and capabilities: 1) Perception Encoder (PE) pre-training, 2) detector pre-training, 3) detector fine-tuning, and 4) tracker training with a frozen backbone. See § C.4.1 for details.

4. Data Engine

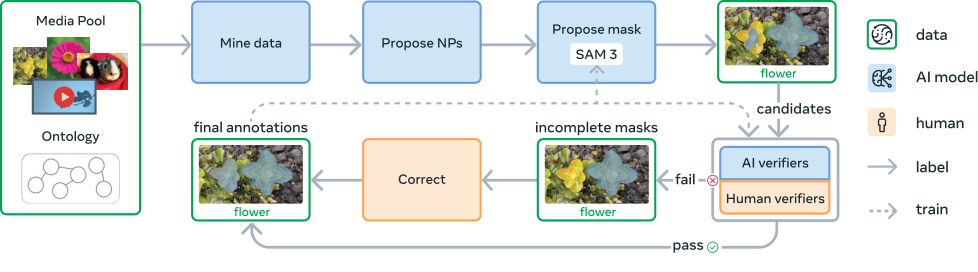

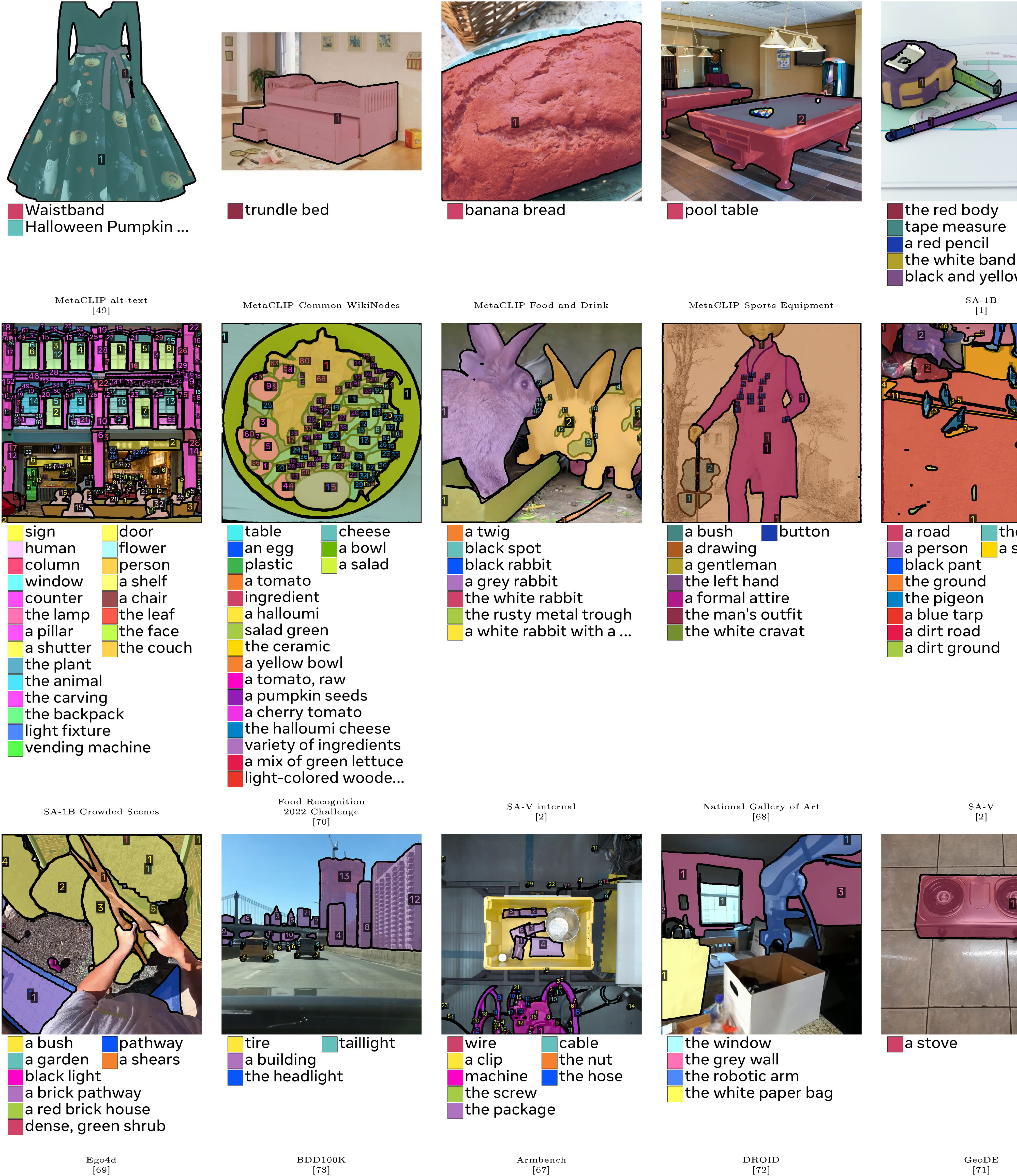

Achieving a step change in PCS with SAM 3 requires training on a large, diverse set of concepts and visual domains, beyond existing datasets (see Figure 12). We build an efficient data engine that iteratively generates annotated data via a feedback loop with SAM 3, human annotators, and AI annotators, actively mining media-phrase pairs on which the current version of SAM 3 fails to produce high-quality training data to further improve the model. By delegating certain tasks to AI annotators---models that match or surpass human accuracy---we more than double the throughput compared to a human-only annotation pipeline. We develop the data engine in four phases, with each phase increasing the use of AI models to steer human effort to the most challenging failure cases, alongside expanding visual domain coverage. Phases 1-3 focus only on images, with Phase 4 expanding to videos. We describe the key steps here; details and metrics are in § D.

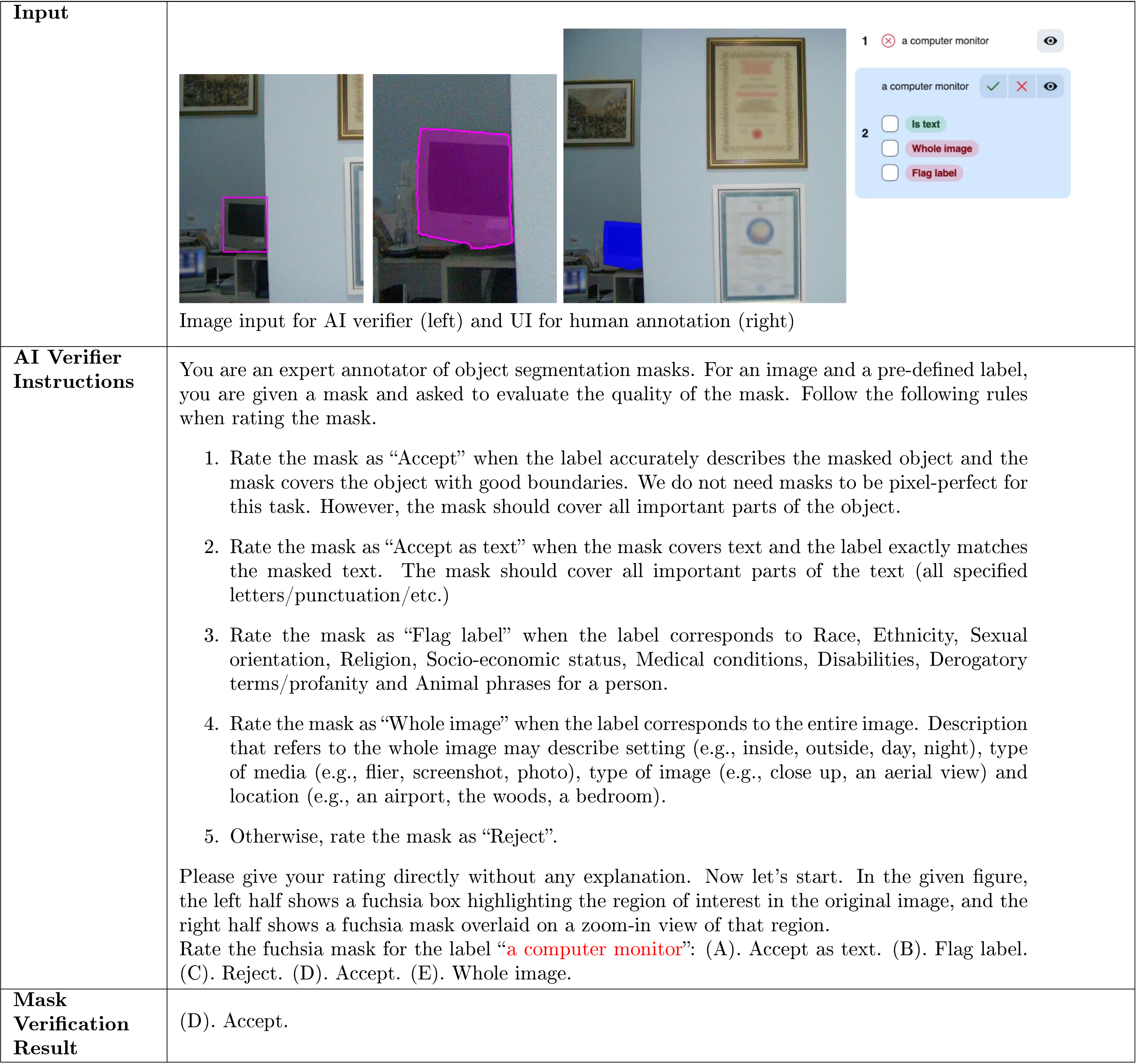

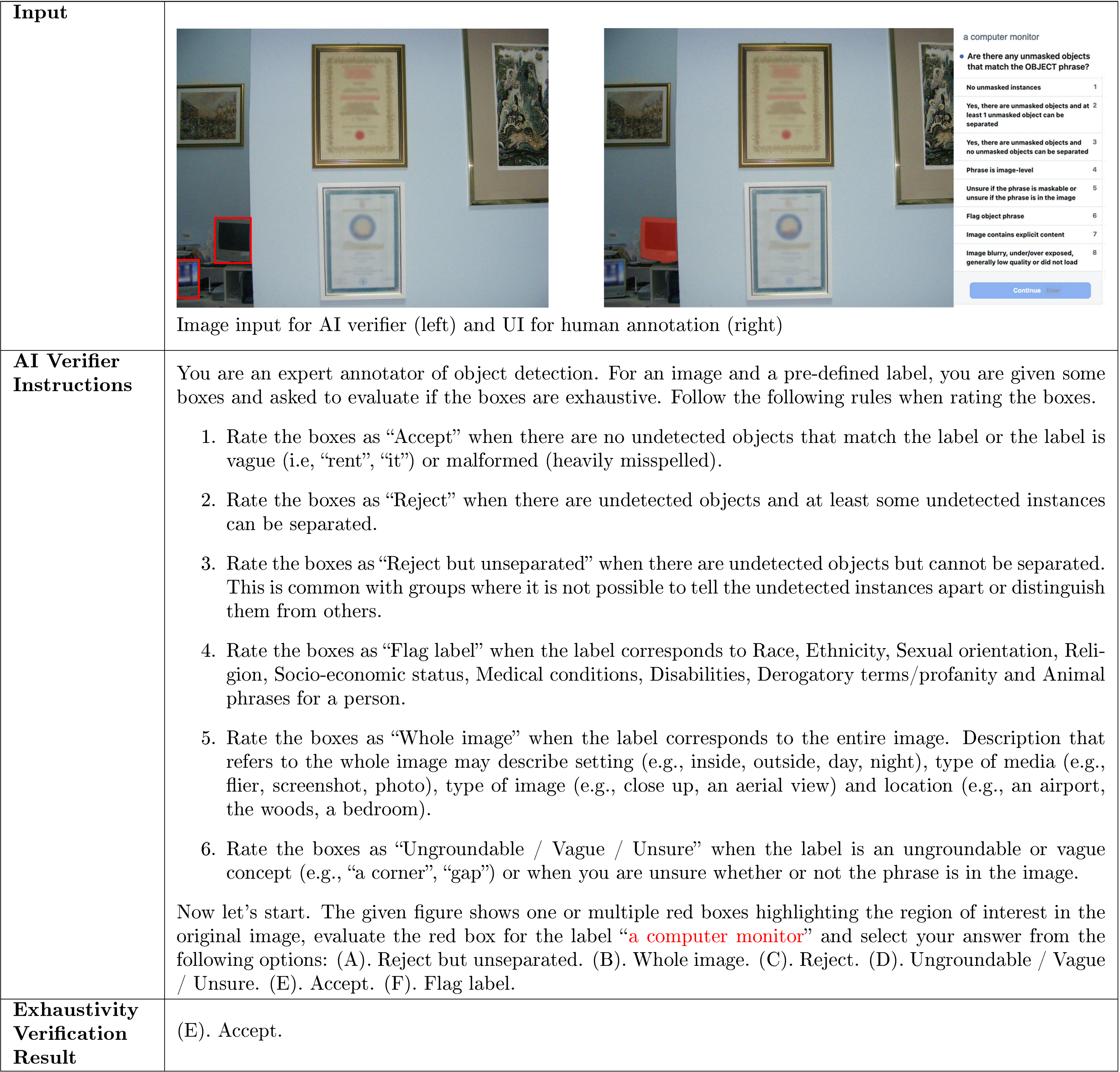

Data Engine Components (Figure 5)

Media inputs (image or video) are mined from a large pool with the help of a curated ontology. An AI model proposes noun phrases (NPs) describing visual concepts, followed by another model (e.g., SAM 3) that generates candidate instance masks for each proposed NP. The proposed masks are verified by a two-step process: first, in Mask Verification (MV) annotators accept or reject masks based on their quality and relevance to the NP. Second, in Exhaustivity Verification (EV) annotators check if all instances of the NP have been masked in the input. Any media-NP pairs that did not pass the exhaustivity check are sent to a manual correction stage, where humans add, remove or edit masks (using SAM 1 in a browser based tool), or use "group" masks for small, hard to separate objects. Annotators may reject ungroundable or ambiguous phrases.

Phase 1: Human Verification

We first randomly sample images and NP proposal with a simple captioner and parser. The initial mask proposal model is SAM 2 prompted with the output of an off-the-shelf open-vocabulary detector, and initial verifiers are human. In this phase, we collected 4.3M image-NP pairs as the initial {{SA-Co} /HQ} dataset. We train SAM 3 on this data and use it as the mask proposal model for the next phase.

Phase 2: Human + AI Verification

In this next phase, we use human accept/reject labels from the MV and EV tasks collected in Phase 1 to fine-tune Llama 3.2 ([13]) to create AI verifiers that automatically perform the MV and EV tasks. These models receive image-phrase-mask triplets and output multiple-choice ratings of mask quality or exhaustivity. This new auto-verification process allows our human effort to be focused on the most challenging cases. We continue to re-train SAM 3 on newly collected data and update it 6 times. As SAM 3 and AI verifiers improve, a higher proportion of labels are auto-generated, further accelerating data collection. The introduction of AI verifiers for MV and EV roughly doubles the data engine's throughput vs. human annotators. We refer to § A.4 for detailed analysis of how AI verifiers improve the data engine's throughput. We further upgrade the NP proposal step to a Llama-based pipeline that also proposes hard negative NPs adversarial to SAM 3. Phase 2 adds 122M image-NP pairs to {{SA-Co} /HQ}.

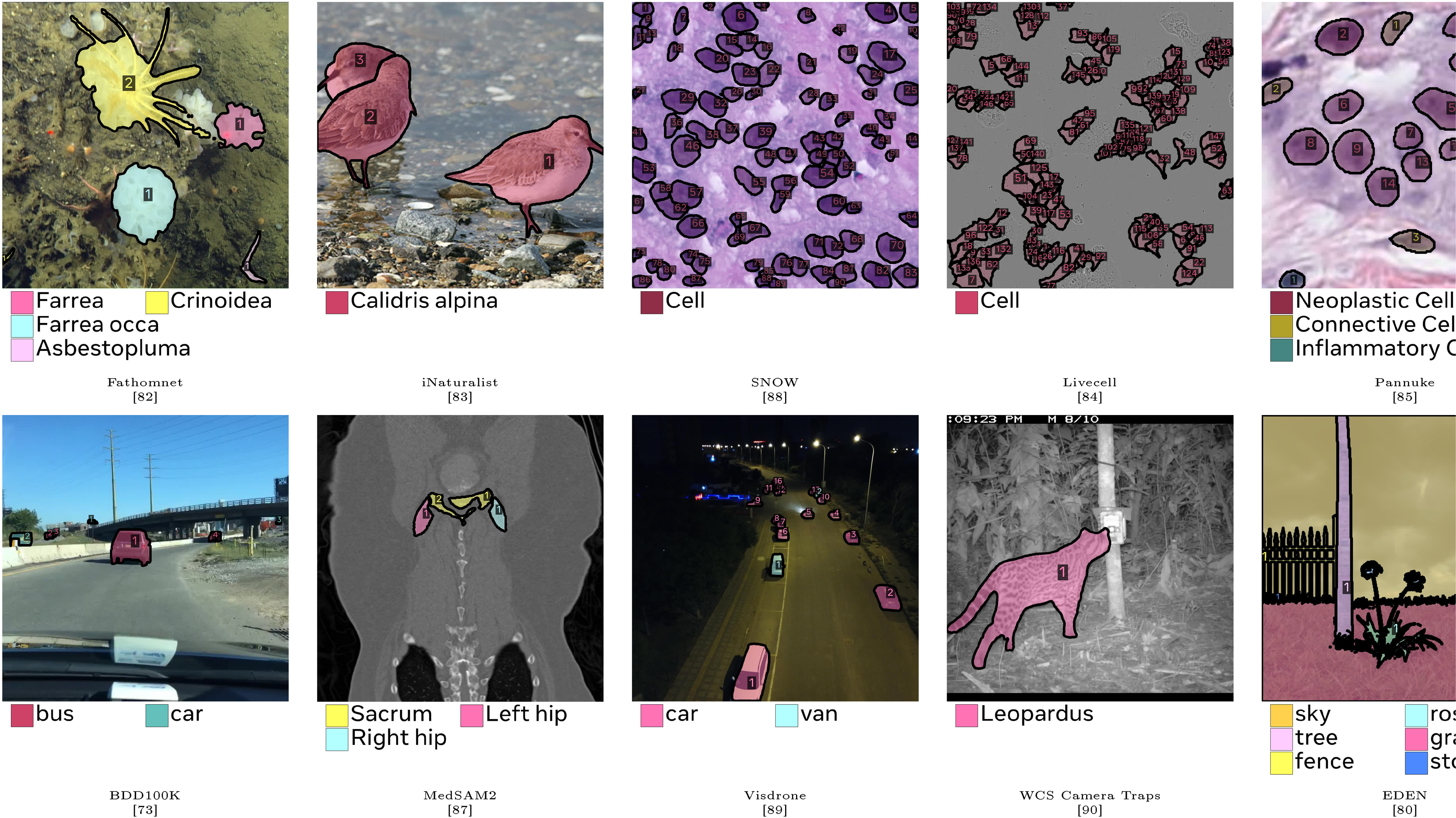

Phase 3: Scaling and Domain Expansion

In the third phase, we use AI models to mine increasingly challenging cases and broaden domain coverage in {{SA-Co} /HQ} to 15 datasets (Figure 15). A domain is a unique distribution of text and visual data. In new domains, the MV AI verifier performs well zero-shot, but the EV AI verifier needs to be improved with modest domain-specific human supervision. We also expand concept coverage to long-tail, fine-grained concepts by extracting NPs from the image alt-text where available and by mining concepts from a 22.4M node {SA-Co} ontology (§ D.2) based on Wikidata (17 top-level categories, 72 sub-categories). We iterate SAM 3 training 7 times and AI verifiers 3 times, and add 19.5M image-NP pairs to {{SA-Co} /HQ}.

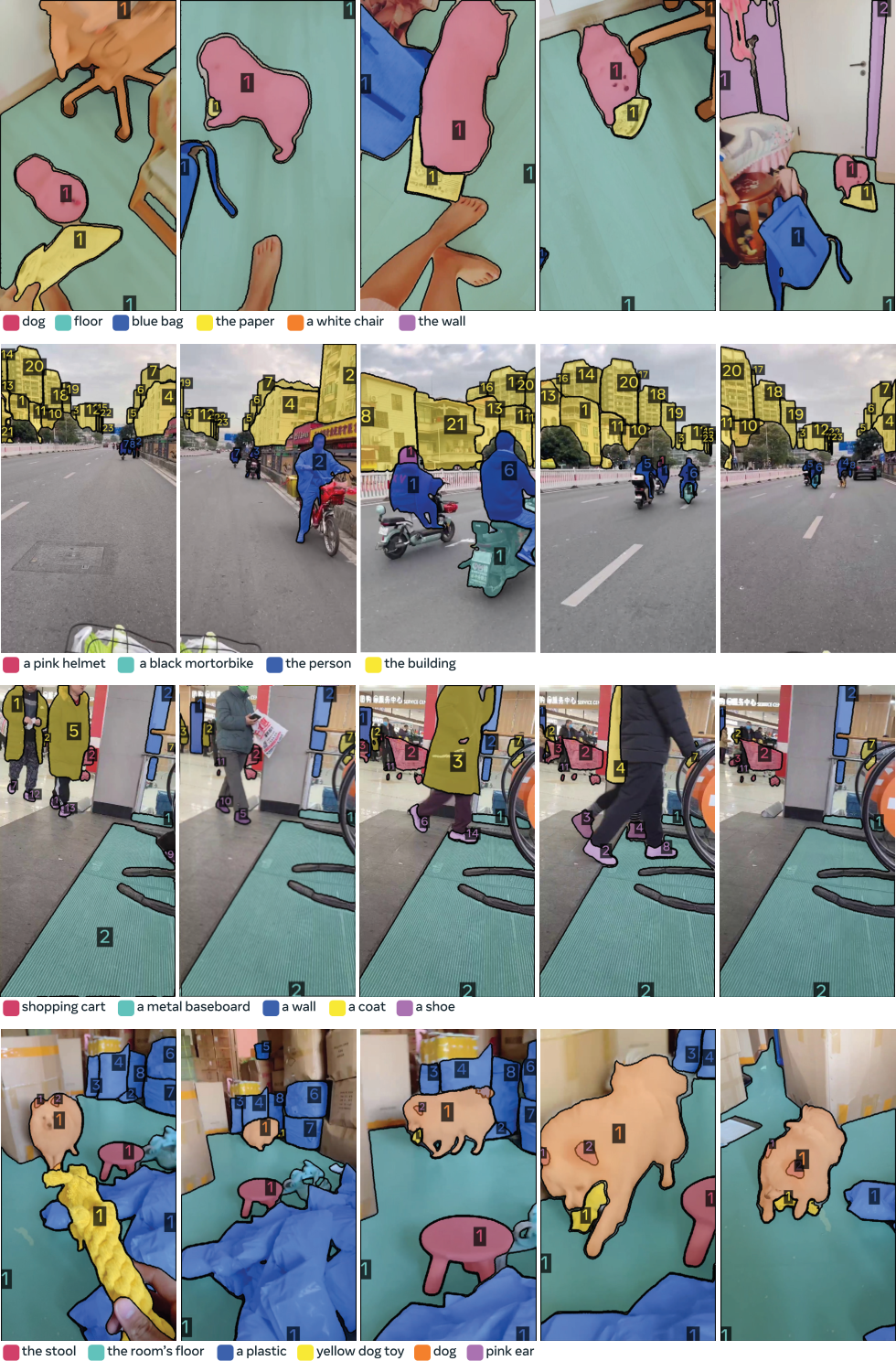

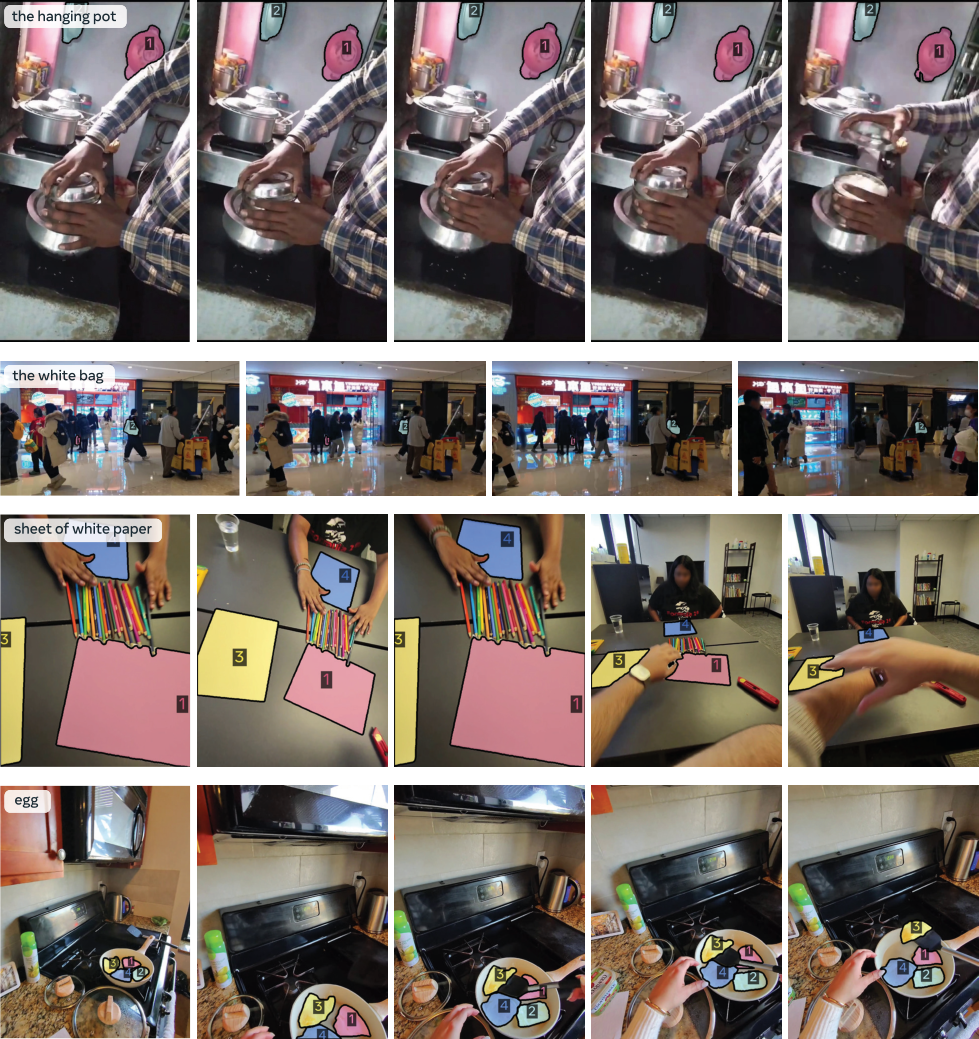

Phase 4: Video Annotation

This phase extends the data engine to video. We use a mature image SAM 3 to collect targeted quality annotations that capture video-specific challenges. The data mining pipeline applies scene/motion filters, content balancing, ranking, and targeted searches. Video frames are sampled (randomly or by object density) and sent to the image annotation flow (from phase 3). Masklets (spatio-temporal masks) are produced with SAM 3 (now extended to video) and post-processed via deduplication and removal of trivial masks. Because video annotation is more difficult, we concentrate humans on likely failures by favoring clips with many crowded objects and tracking failures. The collected video data {{SA-Co} /VIDEO} consists of 52.5K videos and 467K masklets. See § D.6 for details.

5. Segment Anything with Concepts ({SA-Co}) Dataset

Training Data

We collect three image datasets for the PCS task: (i) {{SA-Co} /HQ}, the high-quality image data collected from the data engine in phases 1-4, (ii) {{SA-Co} /SYN}, a synthetic dataset of images labeled by a mature data engine (phase 3) without human involvement, and (iii) {{SA-Co} /EXT}, 15 external datasets that have instance mask annotations, enriched with hard negatives using our ontology pipeline. Notably in the {{SA-Co} /HQ} dataset we annotate 5.2M images and 4M unique NPs, making it the largest high-quality open-vocab segmentation dataset. We also annotate a video dataset, {{SA-Co} /VIDEO}, containing 52.5K videos and 24.8K unique NPs, forming 134K video-NP pairs. The videos on average have 84.1 frames at 6 fps. See § E.1 for details including full statistics, comparison with existing datasets and the distribution of concepts.

SA-Co Benchmark

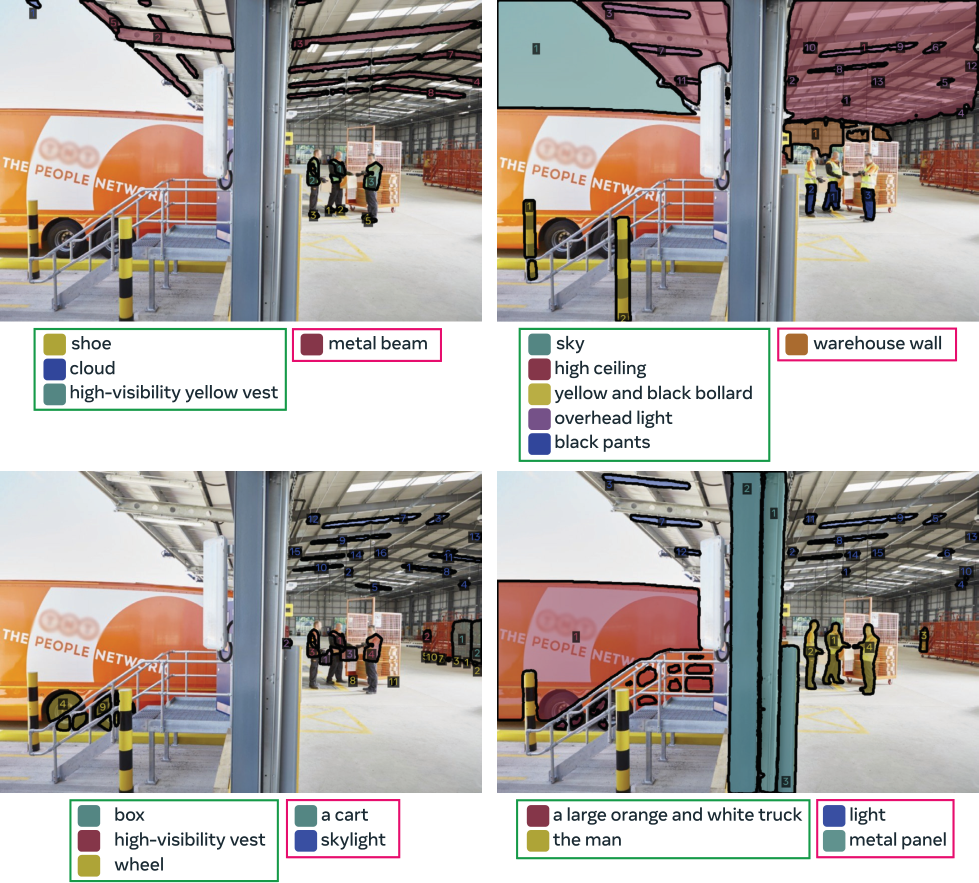

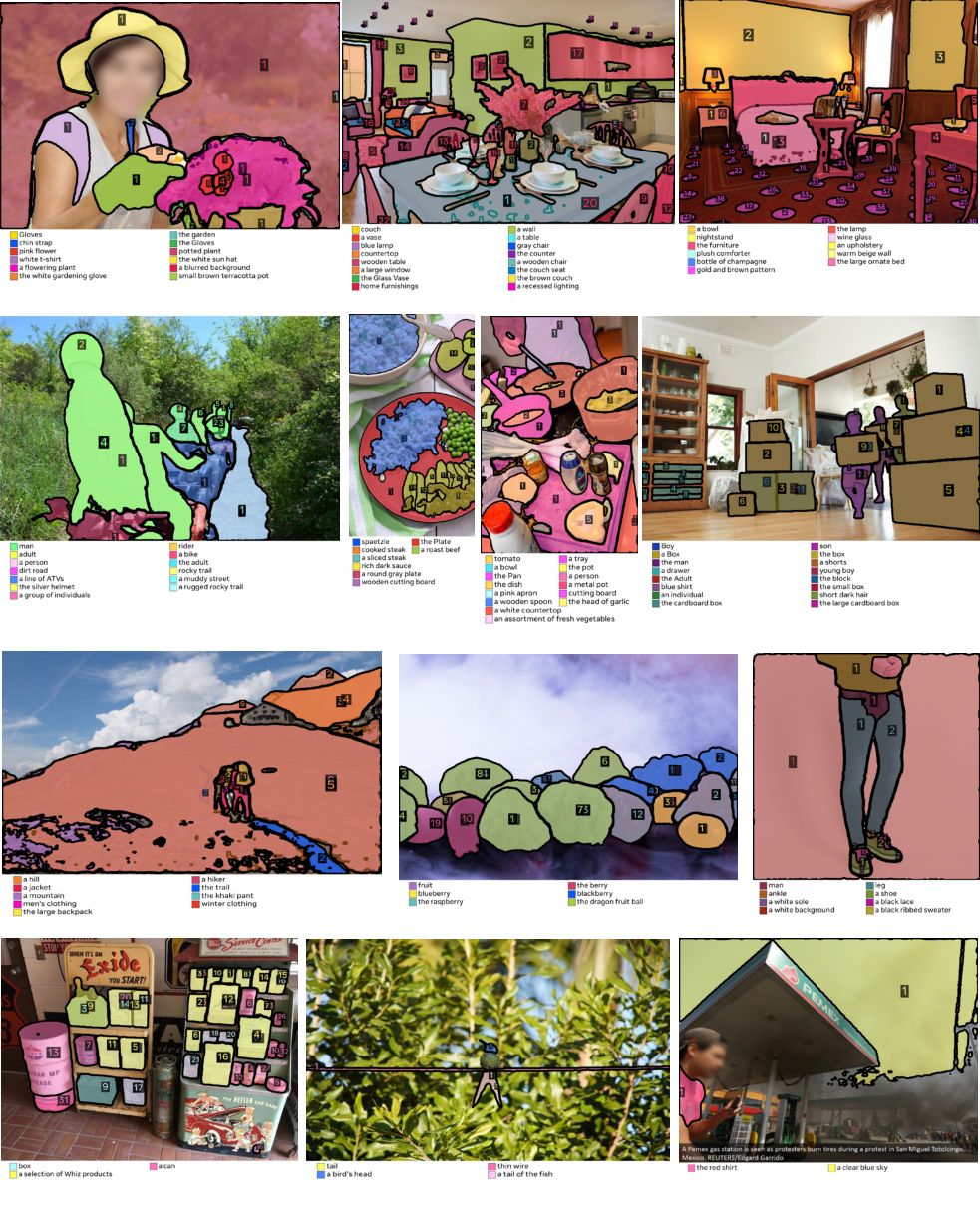

The SA-Co evaluation benchmark has 207K unique phrases, 121K images and videos, and over 3M media-phrase pairs with hard negative labels to test open-vocabulary recognition. It has 4 splits: {{SA-Co} /Gold} has seven domains and each image-NP pair is annotated by three different annotators (used to measure human performance); {{SA-Co} /Silver} has ten domains and only one human annotation per image-NP pair; {{SA-Co} /Bronze} and {{SA-Co} /Bio} are nine existing datasets either with existing mask annotations or masks generated by using boxes as prompts to SAM 2. The {{SA-Co} /VEval} benchmark has three domains and one annotator per video-NP pair. See Table 28 for dataset statistics and Figure 6 for example annotations.

Metrics

We aim to measure the usefulness of the model in downstream applications. Detection metrics such as average precision (AP) do not account for calibration, which means that models can be difficult to use in practice. To remedy this, we only evaluate predictions with confidence above 0.5, effectively introducing a threshold that mimics downstream usages and enforces good calibration. The PCS task can be naturally split into two sub-tasks, localization and classification. We evaluate localization using positive micro F1 () on positive media-phrase pairs with at least one ground-truth mask. Classification is measured with image-level Matthews Correlation Coefficient (IL_MCC) which ranges in and evaluates binary prediction at the image level ("is the object present?") without regard for mask quality. Our main metric, classification-gated F1 (cgF ), combines these as follows: . Full definitions are in § E.3.

Handling Ambiguity

We collect 3 annotations per NP on {{SA-Co} /Gold}. We measure oracle accuracy comparing each prediction to all ground truths and selecting the best score. See § E.3.

6. Experiments

We evaluate SAM 3 across image and video segmentation, few-shot adaptation to detection and counting benchmarks, and segmentation with complex language queries with SAM 3 + MLLM. We also show a subset of ablations, with more in § A. References, more results and details are in § F.

Image PCS with Text

We evaluate instance segmentation, box detection, and semantic segmentation on external and our benchmarks. SAM 3 is prompted with a single NP at a time, and predicts instance masks, bounding boxes, or semantic masks. As baselines, we evaluate OWLv2, GroundingDino (gDino), and LLMDet on box detection, and prompt SAM 1 with their boxes to evaluate segmentation. We also compare to APE, DINO-X, and Gemini 2.5 Flash, a generalist LLM. Table 1 shows that zero-shot, SAM 3 sets a new state-of-the-art on closed-vocabulary COCO, COCO-O and on LVIS boxes, and is significantly better on LVIS masks. On open-vocabulary {{SA-Co} /Gold} SAM 3 achieves more than double the cgF score of the strongest baseline OWLv2, and 74% of the estimated human performance. The improvements are even higher on the other SA-Co splits. Open vocabulary semantic segmentation results on ADE-847, PascalConcept-59, and Cityscapes show that SAM 3 outperforms APE, a strong specialist baseline. See § F.1 for details.

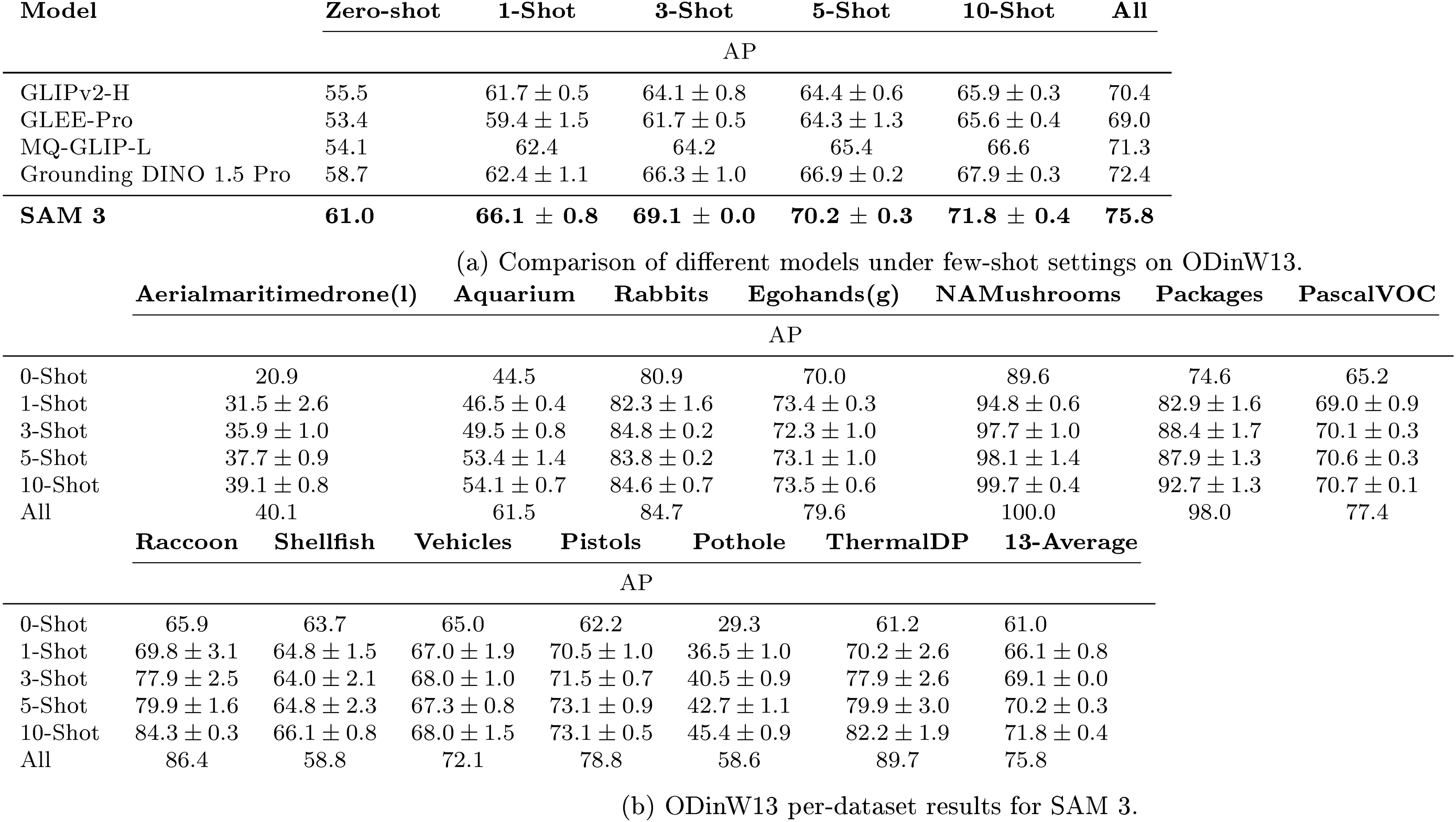

Few-Shot Adaptation

We evaluate zero- and few-shot transfer of SAM 3 on ODinW13 and RF100-VL, with their original labels as prompts. We do not perform any prompt tuning. We fine-tune SAM 3 without mask loss, and report average bbox mAP in Table 2. SAM 3 achieves state-of-the-art 10-shot performance, surpassing in-context prompting in Gemini and object detection experts (gDino); more details in § F.3. RF-100VL contains domains with specialized prompts that are out of SAM 3's current scope, but SAM 3 adapts through fine-tuning more efficiently than baselines.

PCS with 1 Exemplar

We first evaluate image exemplars using a single input box sampled at random from the ground truth. This can be done only on "positive" data, where each prompted object appears in the image. We report the corresponding in Table 3 across three settings: text prompt (T), exemplar image (I), and both text and image (T+I); SAM 3 outperforms prior state-of-the-art T-Rex2 by a healthy margin on COCO (+18.3), LVIS (+10.3), and ODinW (+20.5). See § F.2 for more details and results on {{SA-Co} /Gold}.

PCS with K Exemplars

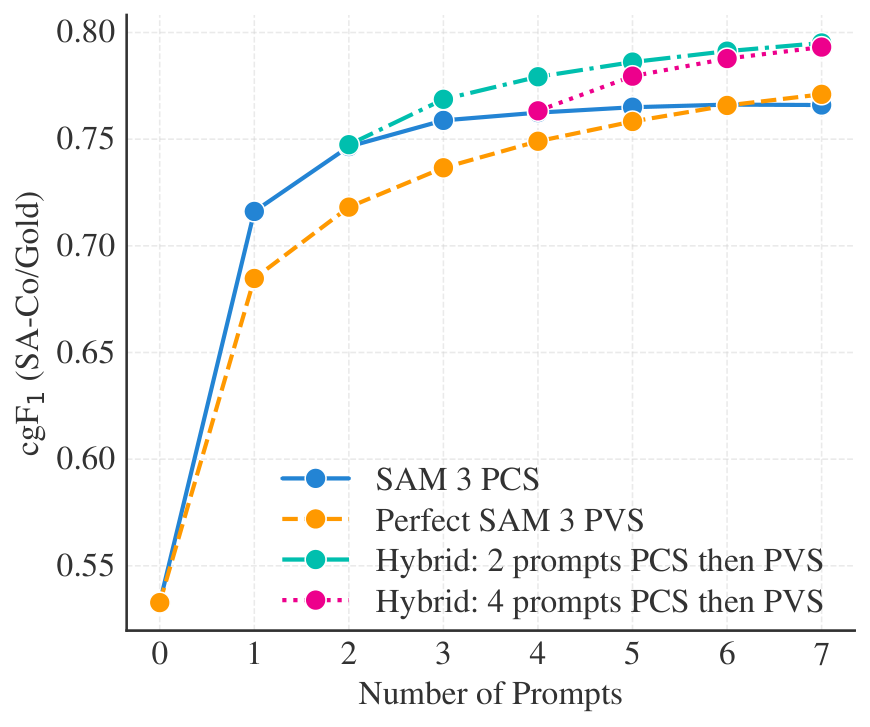

Next, we evaluate SAM 3 in an interactive setting, simulating collaboration with a human annotator. Starting with a text prompt, we iteratively add one exemplar prompt at a time: missed ground truths are candidate positive prompts, false positive detections are candidate negative prompts. Results (Figure 7) are compared to a perfect PVS baseline, where we simulate the user manually fixing errors using ideal box-to-mask corrections. SAM 3's PCS improves cgF more quickly, as it generalizes from exemplars (e.g., detecting or suppressing similar objects), while PVS only corrects individual instances. After 3 clicks, interactive PCS outperforms text-only by +21.6 cgF points and PVS refinement by +2.0. Performance plateaus after 4 clicks, as exemplars cannot fix poor-quality masks. Simulating a hybrid switch to PVS at this point yields gains, showing complementary.

Object Counting

We evaluate on object counting benchmarks CountBench and PixMo-Count to compare with several MLLMs using Accuracy (%) and Mean Absolute Error (MAE) from previous technical reports and our own evaluations. See Table 4 for results and § F.4 for more evaluation details. Compared to MLLMs, SAM 3 not only achieves good object counting accuracy, but also provides object segmentation that most MLLMs cannot provide.

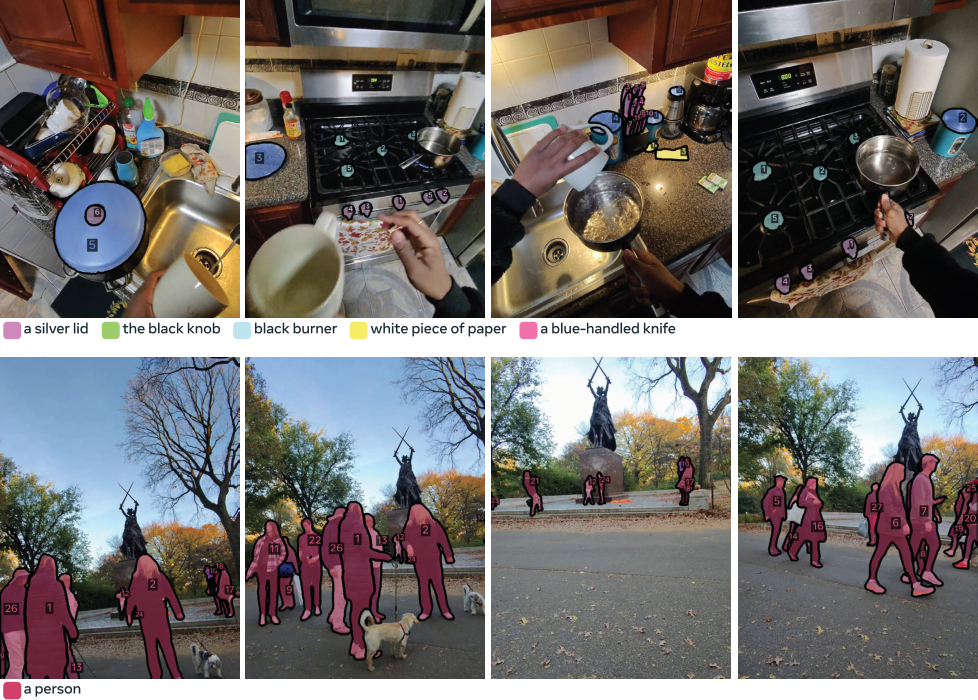

Video PCS with Text

We evaluate video segmentation with text prompts on both our {{SA-Co} /VEval} benchmark and existing public benchmarks. For {{SA-Co} /VEval}, we report cgF and pHOTA metrics (defined in § F.5) across its subsets (SA-V, YT-Temporal-1B, SmartGlasses). For public benchmarks, we use their official metrics. Baselines include GLEE, an open-vocabulary image and video segmentation model, "LLMDet + SAM 3 Tracker" (replacing our detector with LLMDet), and "SAM 3 Detector + T-by-D" (replacing our tracker with an association module based on the tracking-by-detection paradigm). In Table 5, SAM 3 largely outperforms these baselines, especially on benchmarks with a very large number of noun phrases. On {{SA-Co} /VEval} it reaches over 80% of human pHOTA. See § F.5 for more details.

PVS

We evaluate SAM 3 on a range of visual prompting tasks, including Video Object Segmentation (VOS) and interactive image segmentation. Table 6 compares SAM 3 to recent state-of-the-art methods on the VOS task. SAM 3 achieves significant improvements over SAM 2 on most benchmarks, particularly on the challenging MOSEv2 dataset, where SAM 3 outperforms prior work by 6.5 points. For the interactive image segmentation task, we evaluate SAM 3 on the 37 datasets benchmark introduced in [2]. As shown in Table 7, SAM 3 outperforms SAM 2 on average . See also § F.6 and Figure 21 for interactive video segmentation.

6.1.1.1 SAM 3 Agent

We experiment with an MLLM that uses SAM 3 as a tool to segment more complex text queries (see Figure 25). The MLLM proposes noun phrase queries to prompt SAM 3 and analyzes the returned masks, iterating until the masks are satisfactory. Table 8 shows that this " SAM 3 Agent" evaluated zero-shot on ReasonSeg and OmniLabel surpasses prior work without training on any referring expression segmentation or reasoning segmentation data. SAM 3 Agent also outperforms previous zero-shot results on RefCOCO+ and RefCOCOg. SAM 3 can be combined with various MLLMs, with the same set of the system prompts for all those MLLMs, showing SAM 3's robustness. See § G for more details.

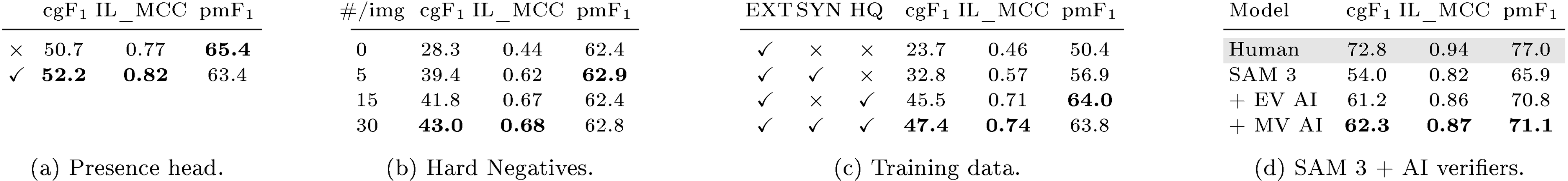

Selected Ablations

In Table 9 we report a subset of the more extensive ablations from § A. Note that the ablated models are from different, shorter training runs than the model evaluated above. The presence head boosts cgF by +1.5 (Table 9a), improving image-level recognition measured by IL_MCC by +0.05. Table 9b shows that adding hard negatives significantly improves the model performance, most notably the image-level IL_MCC from 0.44 to 0.68. Table 9c shows that synthetic (SYN) training data improves over the external (EXT) by +8.8 cgF and our high-quality (HQ) annotations add +14.6 cgF on top of this baseline. We present detailed data scaling laws of both types of data in § A.2, showing their effectiveness on both in-domain and out-of-domain test sets. In Table 9d, we show how AI verifiers can improve pseudo-labels. Replacing the presence score from SAM 3 with that score from the exhaustivity verification (EV) AI verifier boosts cgF by +7.2. Using the mask verification (MV) AI verifier to remove bad masks adds another 1.1 points. Overall, AI verifiers close half of the gap between SAM 3's and human performance.

Domain adaptation ablation

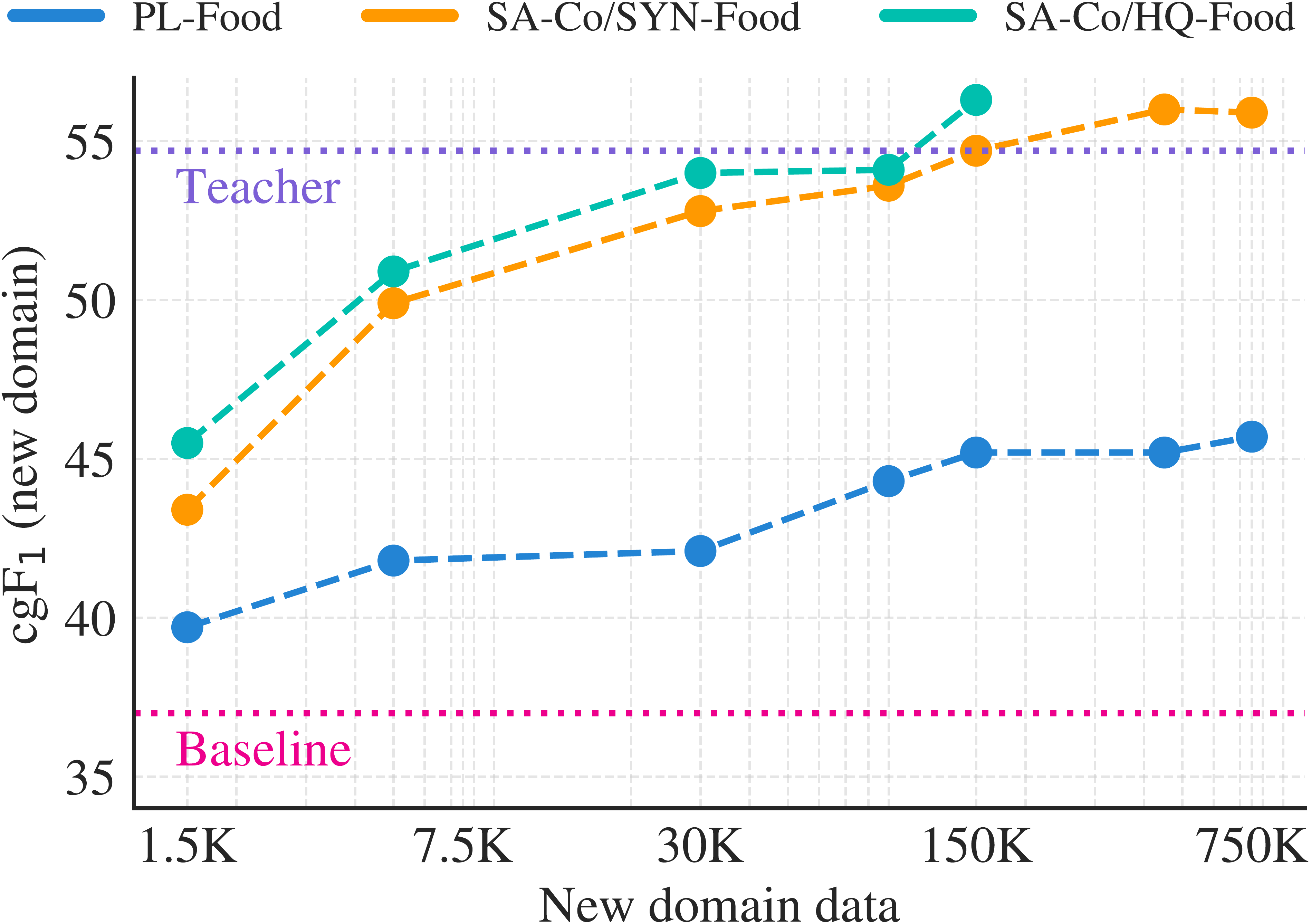

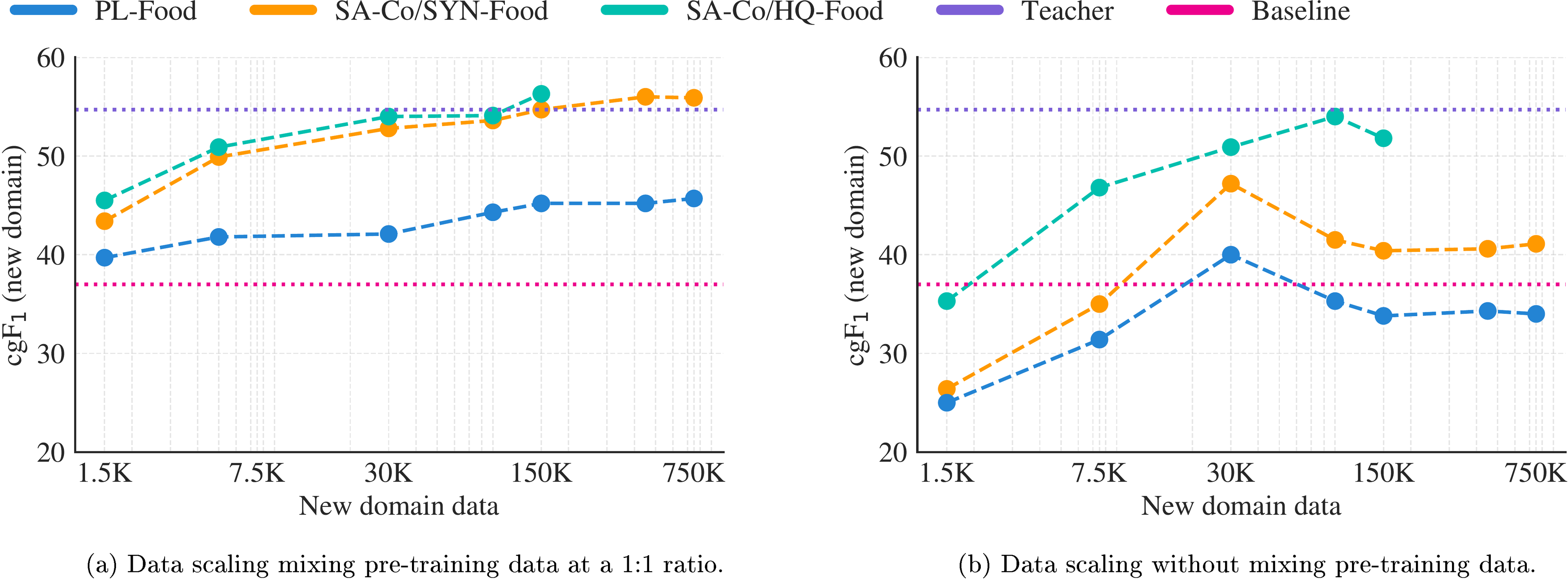

With domain-specific synthetic data generated by SAM 3 + AI verifiers, we show that one can significantly improve performance on a new domain without any human annotation. We hold out one of the SA-Co domains, "Food&drink", from training SAM 3 and AI verifiers. We then use three variants of training data for the novel "Food&drink" domain: high-quality AI+human annotations as in {{SA-Co} /HQ} (referred to as {{SA-Co} /HQ} -Food), synthetic annotations as in {{SA-Co} /SYN}, using AI but no humans ({{SA-Co} /SYN} -Food), and pseudo-labels generated before the AI verification step, i.e. skipping both AI verifiers and humans (PL-Food). Figure 8 plots performance on the "Food&drink" test set of the {{SA-Co} /Gold} benchmark as each type of training data is scaled up. We mix the domain specific data and high-quality general domain data at a 1:1 ratio. PL-Food provides some improvement compared to the baseline SAM 3 (zero-shot), but is far below the other variants due to its lower quality. HQ-Food and SYN-Food show similar scaling behavior, with SYN-Food slightly lower but eventually catching up, without incurring any human annotation cost. This points to a scalable way to improve performance on new data distributions. More details are in § A.3.

7. Related Work

Promptable and Interactive Visual Segmentation. SAM ([1]) introduces "promptable" image segmentation with interactive refinement. While the original task definition included text prompts, they were not fully developed. SAM 2 ([2]) extended the promptable visual segmentation task to video, allowing refinement points on any frame. SAM 3 inherits geometry-based segmentation while extending to include text and image exemplar prompts to segment all instances of a concept in images and videos.

Open-Vocabulary Detection and Segmentation in Images exhaustively labels every instance of an open-vocabulary object category with a coarse bounding box (detection) or a fine-grained pixel mask (segmentation). Recent open-vocabulary (OV) detection ([14,15]) and segmentation ([16,17]) methods leverage large-scale vision-language encoders such as CLIP ([18]) to handle categories described by arbitrary text, even those never seen during training. While DETR ([5]) is limited to a closed set of categories seen during training, MDETR ([7]) evolves the approach to condition on raw text queries. Image exemplars used as prompts to specify the desired object category (e.g., DINOv ([19]), T-Rex2 ([20])) present a practical alternative to text, but fall short in conveying the abstract concept of objects as effectively as text prompts. We introduce a new benchmark for OV segmentation with more unique concepts than prior work.

Visual Grounding localizes a language expression referring to a region of the image with a box or mask. ([21]) introduces phrase detection as both deciding whether the phrase is relevant to an image and localizing it. GLIP ([22]) and GroundingDino ([23]) formulate object detection as phrase grounding, unifying both tasks during training. MQ-GLIP ([24]) adds image exemplars to text as queries. Building on this trend toward models supporting multiple tasks and modalities, GLEE ([25]) allows text phrases, referring expressions, and visual prompts for category and instance grounding in both images and videos. Unlike SAM 3, GLEE does not support exemplars or interactive refinement. LISA ([26]) allows segmentation that requires reasoning, while OMG-LLaVa ([27]) and GLaMM ([28]) generate natural language responses interleaved with corresponding segmentation masks, with GLaMM accepting both textual and optional image prompts as input. Some general-purpose MLLMs can output boxes and masks (Gemini2.5 ([29])) or points (Molmo ([30])). SAM 3 can be used as a "vision tool" in combination with an MLLM (§ 6.1.1.1).

Multi-Object Tracking and Segmentation methods identify object instances in video and track them, associating each with a unique ID. In tracking-by-detection methods, detection is performed independently on each frame to produce boxes and confidence scores, followed by association of boxes using motion-based and appearance-based matching as in SORT ([31,32]), Tracktor ([33]), ByteTrack ([34]), SAM2MOT ([35]), or OC-SORT ([36]). An alternative is an end-to-end trainable architecture that jointly detects and associates objects, e.g., TrackFormer ([37]), TransTrack ([38]), or MOTR ([39]). TrackFormer uses a DETR-like encoder-decoder that initializes new tracks from static object queries and auto-regressively follows existing tracks with identity-preserving track queries. A challenge with joint models is the conflict between detection and tracking ([40,41]), where one needs to focus on semantics while the other on disentangling identities, even if their spatial locations overlap over time. SAM 3 is a strong image detector tightly integrated into a tracker to segment concepts in videos.

8. Conclusion

We present Segment Anything with Concepts, enabling open-vocabulary text and image exemplars as prompts in interactive segmentation. Our principal contributions are: (i) introducing the PCS task and SA-Co benchmark, (ii) an architecture that decouples recognition, localization and tracking and extends SAM 2 to solve concept segmentation while retaining visual segmentation capabilities, (iii) a high-quality, efficient data engine that leverages the complimentary strengths of human and AI annotators. SAM 3 achieves state-of-the-art results, doubling performance over prior systems for PCS on SA-Co in images and videos. That said, our model has several limitations. For example, it struggles to generalize to out-of-domain terms, which could be mitigated by automatic domain expansion but requires extra training. We discuss this and other limitations of our model in § B. We believe SAM 3 and the SA-Co benchmark will be important milestones and pave the way for future research and applications in computer vision.

9. Acknowledgements

We would like to thank the following people for their contributions to the SAM 3 project: Alex He, Alexander Kirillov, Alyssa Newcomb, Ana Paula Kirschner Mofarrej, Andrea Madotto, Andrew Westbury, Ashley Gabriel, Azita Shokpour, Ben Samples, Bernie Huang, Carleigh Wood, Ching-Feng Yeh, Christian Puhrsch, Claudette Ward, Daniel Bolya, Daniel Li, Facundo Figueroa, Fazila Vhora, George Orlin, Hanzi Mao, Helen Klein, Hu Xu, Ida Cheng, Jake Kinney, Jiale Zhi, Jo Sampaio, Joel Schlosser, Justin Johnson, Kai Brown, Karen Bergan, Karla Martucci, Kenny Lehmann, Maddie Mintz, Mallika Malhotra, Matt Ward, Michelle Chan, Michelle Restrepo, Miranda Hartley, Muhammad Maaz, Nisha Deo, Peter Park, Phillip Thomas, Raghu Nayani, Rene Martinez Doehner, Robbie Adkins, Ross Girshik, Sasha Mitts, Shashank Jain, Spencer Whitehead, Ty Toledano, Valentin Gabeur, Vincent Cho, Vivian Lee, William Ngan, Xuehai He, Yael Yungster, Ziqi Pang, Ziyi Dou, Zoe Quake. We also thank the IDEA team for granting us DINO-X and T-Rex2 access to benchmark them on the {{SA-Co} /Gold} dataset.

Appendix

A. Ablations

A.1 Model Ablations

Presence Token

We first ablate the impact of the presence token and the approach to its training. The presence token is included in the decoder (discussed further in § C.2), together with the object queries, and predicts a concept presence score. The presence score receives gradients only on the PCS task during joint training and is always supervised with the presence (or absence) of the concept in the image using a binary cross-entropy loss. Using a presence token to decouple presence and localization brings significant gains in performance, particularly on IL_MCC, see Table 9a.

When used with a presence score, we found that it is better for the box/mask object scores to not receive gradients when a concept is an image-level negative, see Setting (a) in Table 10. Note that this is in contrast to the approach in typical DETR variants, where all individual object scores are supervised negatively to reflect the absence of the concept in the image, see Setting (b) in Table 10. We find that (b) works worse than (a) when used with the presence score. When a concept is present in the image, individual object queries always receive classification supervision based on Hungarian matching. Setting (a) is consistent with our recognition-localization decoupled design, where the presence score is responsible for recognition (existence in the image) and the object scores are responsible for localization (i.e., rank the best match to the positive ground-truth highest among all the proposals).

During inference, we use the product of the global presence score and the object score as the total object score. In Setting (c), we explored directly supervising the total object scores (instead of the typical object scores) as positive or negative (as determined by matching); this setting can slightly improve the overall cgF , but is less flexible as the presence and object scores are jointly calibrated, e.g. such a model is less amenable to conditioning on a concept known to be present in the image. Finally, Setting (d) in Table 10 investigates detaching the presence score from the computation graph while supervising the total scores, but this does not improve over (c).

Training with presence can be considered as a form of post-training and occurs in Stage 3 (see § C.4.1) of our training pipeline. By default, ablations do not undergo this stage unless otherwise mentioned.

Vision and Text Encoder

While SAM 2 uses an MAE ([42]) pre-trained Hiera ([43]) vision encoder for its strong localization capability and efficiency for the more geometric PVS task, SAM 3 also needs strong semantic and linguistic understanding with broad coverage. We adapted PE ([4]) for the vision and text encoders of SAM 3, so that a large and diverse set of concepts is seen in Stage 1 of training, while producing aligned image and text encoders. In Table 11, we compare performance with Hiera and DINOv2 ([44]); since these vision encoders lack an aligned text encoder, we use DistilRoBERTa-base ([45]). We find PE to be the best overall choice of vision backbone, and using its own aligned text encoder provides further gains over PE with an unaligned text baseline. Use of PE enables strong robustness in SAM 3 (here measured by AP on COCO-O, demonstrating good object detection across various domain shifts, e.g. "sketch", "cartoon", "painting", etc).

Implementation Details. The image resolution is set to 1008 px, 1008 px, 1152 px for PE, DINOv2, Hiera, respectively, ensuring the same number of tokens in the detector due to their differences in patch size. All vision encoders used global attention in only a subset of the layers, using windowed ( tokens) attention otherwise. Since Hiera is a hierarchical multiscale encoder, we set the window size to in stage 3 of the encoder, which has most of the FLOPs. Since PE is capable of using relative positional information via RoPE ([46,47]), we include relative positional embeddings in global layers for Hiera and DINOv2 following [48]. All models are trained using {{SA-Co} /HQ} viewing 5 million samples over the course of training. Recipe is separately optimized for each choice of encoder. Tokens from the respective vision encoders are downsampled by to 1296 tokens before being passed to the fusion encoder and detector.

A.2 Image Training Data Ablations

Setup. We adopt a simplifed, lighter model and training strategy for ablations in this section. Specifically, we use (i) a stride-28 (instead of 14) variant of SAM 3 using 4 fewer tokens in the detector, (ii) limit to 45% of the entire {{SA-Co} /SYN} dataset and adopt, (iii) shorter training schedules and do not run "presence post-training" (see § A), (iv) evaluations are on an internal version of {{SA-Co} /Gold}, which has slightly lower human performance than the public version (cgF : internal 70.8 vs public 72.8). This allows running ablations more efficiently (but results in lower absolute accuracy vs. SAM 3). We observed similar trends when training at scale.

SAM 3 Training Data

Table 9c analyzes the impact of various SA-Co training data subsets. Training with even with just {{SA-Co} /EXT} shows comparable performance with best external models on {{SA-Co} /Gold} (see OWLv2's and DINO-X's performance in Table 1), indicating a strong base model. Adding synthetic data {{SA-Co} /SYN} into the training mix results in significantly improved performance. The performance further increases after adding the high-quality {{SA-Co} /HQ} data due to its quality and distributional similarity with {{SA-Co} /Gold}. Although {{SA-Co} /HQ} is large-scale and in-domain with {{SA-Co} /Gold}, {{SA-Co} /SYN} shows further gains on {{SA-Co} /Gold} when added on top of {{SA-Co} /HQ}.

{{SA-Co} /HQ} Scaling Law

Table 12 investigates scaling behavior of the {{SA-Co} /HQ} training data. For this ablation, the data mix is sampled randomly from the entire {{SA-Co} /HQ} (collected from the three phases in § 4) at a fixed percentage. We also report scaling behavior on two specific subsets of {{SA-Co} /Gold}: the MetaCLIP [49] subset annotated with generic caption-derived NPs, and Wiki-Food&Drink subset annotated with fine-grained NPs from SA-Co Ontology nodes. {{SA-Co} /HQ} improves performance on both subsets as expected, since they are from the same distribution (in-domain). We also report the Teacher (Human) performance in the last row. Due to the simplified setting, the gap between SAM 3 and Human is larger than that of the best SAM 3 model.

{{SA-Co} /SYN} Scaling Law

Table 13 shows that SAM 3 scales well with {{SA-Co} /SYN} data on {{SA-Co} /Gold} benchmark as it benefits from the large scale concepts captured from image captions generated by Llama4 and alt-text associated with the images, for both the in-domain MetaCLIP subset and the out-of-domain Wiki-Food&Drink subset within the {{SA-Co} /Gold} benchmark. The last row shows the Teacher performance (an older version of SAM 3 and AI verifiers) is much better than the student, and explains why {{SA-Co} /SYN} is useful. When comparing the {{SA-Co} /SYN} in Table 13 and {{SA-Co} /HQ} in Table 12, the lower in-domain performance gap on MetaCLIP (42.5 vs. 49.0) comes from the relatively weaker annotation quality of {{SA-Co} /SYN}, due to lacking of the human correction step. The gap is larger on the out-of-domain Wiki-Food&Drink set (37.4 vs. 59.9), because {{SA-Co} /SYN} only covers the MetaCLIP images and noun phrases from a captioning model; see Table 26. We also show in Figure 9 that with additional in-domain synthetic data, we can close the performance gap on {{SA-Co} /Gold} -Wiki-Food&Drink subset without any human involvement.

Hard Negatives

We ablate the number of hard negative noun phrases in {{SA-Co} /HQ} per image in Table 9b. We show that increasing the number of negatives improves SAM 3 performance across all metrics, most notably IL_MCC. Hard negatives are phrases that are not present in the image but that (a previous generation of) SAM 3 predicts masks for, i.e., they are adversarial to (a previous generation of) SAM 3. Training on such difficult distractors helps improve the image-level classification performance captured by the IL_MCC metric.

SAM 3 and AI Verifiers

AI verifiers improve performance over the final SAM 3 model alone on the PCS task, as shown in Table 9d, with per-domain results in Table 14. We first replace the presence score from SAM 3 with a presence score from the Exhaustivity Verification (EV) AI verifier (given the image and noun phrase with no objects as input, the probability of not exhaustive, defined in Table 22). This results in a +7.2 point gain in cgF , from both IL_MCC and pmF . The reason why EV presence score can even improve pmF is because it serves as a better calibration of object scores. Then we apply the Mask Verification (MV) AI verifier to each mask, and remove the rejected masks. This results in a further +1.1 point gain in cgF . The system closes nearly half the gap between SAM 3 and human performance, which indicates potential further improvements of SAM 3 by scaling up the {{SA-Co} /SYN} data and SAM 3 model size.

A.3 Automatic Domain Adaptation

With domain-specific synthetic data generated by SAM 3 + AI verifiers, we show that one can significantly improve performance on a new domain without any human annotation. We select "Food & drink" concepts with MetaCLIP images as the new domain. We generated three variants of synthetic training data on this "Food & drink" domain, while ensuring that no data from the new domain was used in training the AI annotators (including SAM 3 and AI verifiers):

- PL-Food: We select "Food&drink" Wiki nodes and mine images from MetaCLIP (refer to Concept Selection, Offline Concept Indexing and Online Mining steps in § D.4 for more details on data mining). For pseudo-annotating fine-grained "Food&drink" concepts, we use Wiki ontology to identify relevant coarse-grained concepts that SAM 3 works well on and prompt SAM 3 with them to generate masks. This data is similar to typical pseudo-labeled data used in prior work for detection self-training (e.g. [15]).

- {{SA-Co} /SYN} -Food: PL-Food is cleaned by AI verifiers: MV AI verifier to remove bad masks, and EV AI verifier to verify exhaustivity/negativity of (image, noun phrase) pairs, as the AI verification step in Figure 5.

- {{SA-Co} /HQ} -Food: PL-Food is cleaned by human verifiers for both MV and EV tasks. For non-exhaustive datapoints after EV, human annotators further manually correct them, as the "Correct" step in Figure 5.

We study the data scaling law of these three variants by evaluating their performance on the Wiki-Food&Drink subset of the {{SA-Co} /Gold} benchmark.

We train the models in 2 steps to isolate the impact of the data from the new domain from other data as well as to amortize training costs. We first pre-train a base model using " {{SA-Co} /HQ} minus {{SA-Co} /HQ} -Food" to establish base capability and a common starting point. Next, we fine-tune the same base model with the three data variants in two settings: with or without mixing the pre-training data.

Figure 9a shows the scaling law when mixing the synthetic data for the new domain with the pre-training data in a 1:1 ratio. We observe some improvement with PL-Food compared to baseline, but there is a large gap between the other variants due to its lower quality. {{SA-Co} /HQ} -Food and {{SA-Co} /SYN} -Food have similar data scaling behavior, with {{SA-Co} /SYN} -Food slightly lower but eventually catching up, without incurring any human annotation cost. The model trained on {{SA-Co} /SYN} -Food eventually surpassed the performance of its teacher system, thanks to the high quality pre-training data mixed during the fine-tuning.

Figure 9b shows the scaling law when fine-tuned with only synthetic data for the new domain. All three data variants result in poorer performance than that in Figure 9a. In this setting, there is a larger gap between {{SA-Co} /HQ} -Food and {{SA-Co} /SYN} -Food reflecting the lower quality of {{SA-Co} /SYN} -Food (mainly lack of exhaustivity due to no human correction). Comparing Figure 9a and Figure 9b, it is beneficial to include high-quality general-domain data when fine-tuning SAM 3 on new domains, particularly when using synthetic data.

A.4 Image Data Engine Annotation Speed

Table 15 measures the speedup in the SAM 3 data engine from adding AI verifiers when collecting data on a new domain with fine-grained concepts. We use the same setup as Figure 9, annotating Wiki-Food&Drink data generated with a data engine where neither SAM 3 nor AI verifiers have been trained on Wiki-Food&Drink data. We annotate the same set of image-NP pairs in four settings:

- Human (NP Input). A human annotator is given a single image noun-phrase pair from {{SA-Co} /HQ} -Food, and is required to manually annotate all instance masks. No mask proposals or AI-verifiers are used in the loop.

- Human (Mask Input). The same annotation task as "NP input" but in this setting, the human annotators starts with PL-Food, i.e., image noun-phrase pairs with mask proposals generated by SAM 3.

- Engine (All Human) Similar to Phase 1 in the SAM 3 data engine, humans start with PL-Food, and sequentially perform 3 tasks: Mask Verification, Exhaustivity Verification and Correction. All three tasks are performed by humans.

- Engine (Full) Similar to Phase 3 in the SAM 3 data engine, Mask Verification and Exhaustivity Verification tasks are completed by AI verifiers, and Correction is done by humans i.e human annotators in the manual annotation task start with {{SA-Co} /SYN} -Food.

Table 15 shows that a version of the SAM 3 model and AI verifiers that were never trained in this new domain double the throughput of the data engine. AI verifiers also allow verifying generated hard negative NPs at scale with close to no human-annotator involvement. As SAM 3 and AI verifiers are updated with the collected data and improve, human annotators need to manually correct fewer errors. This leads to increasingly higher throughput and the collection of more challenging data for a given amount of human annotation time.

In Table 23, we show that AI verifiers achieve a similar even better performance on the MV and EV tasks than human verifiers, so the quality of annotations from these four settings are similar.

A.5 Video Data Engine Annotation Speed

Using the same settings as described in Appendix A.4, we evaluate annotation speed in the video data engine by comparing Human (NP Input) and Engine (All Human) on positive video-NP pairs from {{SA-Co} /VEval} - SA-V. In contrast to the image data engine, we observe that starting with PL increases annotation time, but also improves exhaustivity by providing annotators with more visual cues and candidate masklets.

A.6 Video Training Data Ablations

We analyze how much the SAM 3 model benefits from the videos and annotations in {{SA-Co} /VIDEO} obtained through the video data engine, which are used in Stage 4 (video-level) training (described further in § C.4.1). Specifically, we train the model with a varying amount of masklets from {{SA-Co} /VIDEO} as VOS training data, and evaluate the resulting checkpoints on {{SA-Co} /VEval} under the VOS task with the metric. The results are shown in Table 17, where adding masklets collected with noun phrases through the video data engine (as additional Stage 4 training data) improves the performance on both {{SA-Co} /VEval} and public benchmarks such as DAVIS17 ([50]) and SA-V ([2]).

B. Limitations

SAM 3 shows strong performance on the PCS task in images and videos but has limitations in many scenarios.

SAM 3 struggles to generalize to fine-grained out-of-domain concepts (e.g., aircraft types, medical terms) in a zero-shot manner, especially in niche visual domains (e.g., thermal imagery). Concept generalization for PCS is inherently more challenging than the class-agnostic generalization to new visual domains for the PVS task, with the latter being the key that enables SAM and SAM 2 to be successfully applied zero-shot in diverse settings. Our experiments show that SAM 3 is able to quickly adapt to new concepts and visual domains when fine-tuned on small quantities of human-annotated data (Table 2). Further, we show that we can improve the performance in a new domain without any human involvement (Figure 9), using domain-specific synthetic data generated using our data engine.

From our formulation of the PCS task, SAM 3 is constrained to simple noun phrase prompts and does not support multi-attribute queries beyond one or two attributes or longer phrases including referring expressions. We show that when combined with an MLLM, SAM 3 is able to handle more complex phrases (§ 6.1.1.1 and § G).

In the video domain, SAM 3 tracks every object with a SAM 2 style masklet, which means the cost of SAM 3 inference scales linearly with the number of objects being tracked. To support real-time inference (30 FPS) on videos in practical applications (e.g., a web demo), we parallelize the inference over multiple GPUs: up to 10 objects on 2 H200s, up to 28 objects on 4 H200s, and up to 64 objects on 8 H200s. Further, under the current architecture, there is no shared object-level contextual information to aid in resolving ambiguities in multi-object tracking scenarios. Future developments could address this through shared global memory across multiple objects, which would also improve inference efficiency.

Supporting concept-level interactivity for PCS, alongside instance-level interactivity for PVS, poses several challenges. To support instance-level modifications without affecting all other instances of the concept, we enforce a hard "mode-switch" within the model from concept to instance mode. Future work could include more seamlessly interleaving concept and instance prompts.

C. Model Details

C.1 Model Architecture

Our architecture is broadly based on the SAM series ([2,1]) and DETR ([5]) and uses a (dual) encoder-decoder transformer architecture, see Figure 10 for an overview. SAM 3 is a generalization of SAM 2, supporting the new Promptable Concept Segmentation (PCS) task as well as the Promptable Visual Segmentation (PVS) task ([2]). The design supports multimodal prompts (e.g., text, boxes, points) and interactivity, in images and videos.

SAM 3 has 850M parameters, distributed as follows: 450M and 300M for the vision and text encoders ([4]), and 100M for the detector and tracker components. We next discuss the detector architecture for images followed by the tracker components built on top of it for video.

C.2 Image Implementation Details

The image detector is an encoder-decoder transformer architecture. We describe its details in this section.

Image and Text Encoders

The image and text encoders are Transformers ([51]) trained using constrastive vision language training using 5.4 billion image-text pairs following Perception Encoder (PE) ([4]), see § C.4.1 for training details. As in SAM 2, the vision encoder uses windowed attention ([43,52]) and global attention in only a small subset of layers (4 out of 32), where an image of 1008 pixels is divided into 3 3 non-overlapping windows of 336 pixels each. The vision encoder uses RoPE ([46,47]) in each layer and windowed absolute positional embeddings as in [48]. The text encoder is causal, with a maximum context length of 32.

As in [2], we use a streaming approach, ingesting new frames as they become available. We run the PE backbone only once per frame for the entire interaction, which can span multiple forward/backward propagation steps through a video. The backbone provides unconditioned tokens (features/embeddings) representing each frame to the dual-encoder consisting of the fusion encoder described below and memory attention for video.

Geometry and Exemplar Encoder

The geometry and exemplar encoder is primarily used to encode image exemplars (if present) for the PCS task. It is additionally used to encode visual prompts for the PVS task on images as an auxiliary functionality that is primarily used to include pre-training data for the PVS task in stages-2, -3 of training (see § C.4.1), to enable a more modular training approach.

Each individual image exemplar is encoded using positional embedding, label embedding (positive or negative) and ROI-pooled visual features that are concatenated (comprising "exemplar tokens") and processed by a small transformer. Visual prompts (points, boxes) for auxiliary training are encoded in a similar manner, comprising "geometry tokens". It is possible for neither "geometry tokens" nor "exemplar tokens" to be present (e.g. when only a text prompt is used). The geometry or exemplar tokens attend to each other via self-attention and also cross-attend to the frame-embeddings of the corresponding (unconditioned) frame from the image encoder.

Fusion Encoder

The text and geometry/exemplar tokens together constitute the prompt tokens. The fusion encoder accepts the unconditioned frame-embeddings and conditions on prompt tokens using a stack of 6 transformer blocks with self- and cross-attention (to prompt tokens) layers followed by an MLP. We use vanilla self-attention operations. The output of the fusion encoder are the conditioned frame-embeddings.

Decoder

The decoder architecture follows [5,7] as a starting point and is a stack of 6 transformer blocks. learned object queries (not to be confused with prompts) self-attend to each other and cross attend to the prompts tokens (made up of text and geometry/exemplar tokens) and conditioned frame-embeddings, followed by an MLP. We use box-to-pixel relative position bias ([9]) in the cross-attention layers attending to the conditioned frame-embeddings.

Following standard practice in stronger DETR variants, we use iterative box refinement ([8]), look-forward-twice ([53]) and hybrid matching ([54]) and Divide-And-Conquer (DAC) DETR ([10]). By default, we use object queries. Bounding boxes and scores are predicted using dedicated MLPs and accept the object queries as input.

Presence Head

Classifying each object in isolation is often difficult, due to insufficient information, and may require contextual information from the rest of the image. Forcing each object query to acquire such global awareness is however detrimental, and can conflict with the localization objectives that are by nature very local. To address this, we propose decomposing the classification problem into two complementary components: a global-level classification that determines object presence within the entire image, and a local-level localization that functions as foreground-background segmentation while preventing duplicate detections. Formally, we add the following structure: instead of predicting directly, we break it down as

To compute , we use a presence token, which is added to our decoder and then fed through an MLP classification head. Crucially, the presence score is shared by all object queries. The per-query classification loss is kept as usual, but to account for the decomposition, we only compute it when the NP is present in the image (see § A.1 for ablations on supervision strategy). The same decomposition is applied to the semantic segmentation head, where we reuse the same presence score, and train the binary mask head only on the positive examples.

Besides being more robust to false positives, decomposing the prediction in this manner is also more flexible, e.g. in typical counting tasks, we already know the NP is present in the image and instead want to know how many instances are present - in this case we can simply set . The presence token is concatenated with the object queries in all operations, but is excluded from DAC.

We also learn 4 geometric queries. Their function is similar to the 4 geometric queries in SAM 1 and 2 (where they were called "output tokens") and are used to perform the PVS on individual image or video frames during the stags-2, -3 of training, see § C.4.1. The prompts are provided by the "geometry tokens" in the form of visual prompts. The presence score is set to 1 when performing the PVS task on a single frame, as the target is known to be present in the frame.

Segmentation Head

The segmentation head is adapted from MaskFormer ([12]). Semantic segmentation and instance segmentation share the same segmentation head. The conditioned features from the fusion encoder are used to produce semantic segmentation masks, while instance segmentation additionally uses the decoder's output object queries. "Multi-scale" features are provided to the segmentation head using SimpleFPN ([52]), since the vision encoder is a (single-scale) ViT.

Handling Ambiguity

Experimentally, if we train a SAM 3 model without handling ambiguities as described in § 2 in any way, we observe that the model tends to predict several valid but conflicting interpretations of the phrase. This is expected; if in our training dataset a given phrase has two distinct interpretations, and roughly half the data is annotated assuming the first one, while the other half follows the second one, then the solution that minimizes the training loss is to output both interpretations with 50% confidence. However, this behavior is undesirable for end-users, because it produces conflicting, sometimes overlapping masks.

To address this issue, we add an ambiguity head to our model. Similar to SAM 1 and 2, this head is a mixture of experts, where we train in parallel experts, and only supervise the expert that gets the lowest loss (winner-takes-all). We find that performs the best and that it is more difficult to train experts due to mode collapse.

For a mixture of experts, each producing an output with loss , the mixture loss is a weighted average:

In our winner-takes-all variant, only the expert with the lowest loss receives gradient:

Backpropagating the loss only through the expert which received the minimal loss allows each expert to specialize to one kind of interpretation. This behavior is illustrated in Figure 11.

While this strategy allows experts to specialize, it does not explicitly select which expert should be used at inference time. To resolve this, we train a classification head that predicts the expert that has the highest probability of being correct. The classification head is trained in a supervised fashion with a cross entropy loss, by predicting which expert obtained the minimal loss during training. The Ambiguity head adjusts only the classification logits, leaving masks, boxes, and presence scores unchanged. We train it on top of a frozen SAM 3 model.

Finally, to detect overlapping instances, we compute the Intersection-over-Minimum (IoM) between masks. IoM is more effective than Intersection-over-Union (IoU) for identifying nested instances. With the ambiguity head, we obtain a 15% reduction in overlapping instances.

C.3 Video Implementation Details

The tracker architecture follows [2], which we briefly describe for convenience followed by a discussion of the disambiguation strategies we introduce.

Tracker

The image encoder is the shared PE ([4]) with the detector and provides unconditioned tokens to the memory attention using a separate neck. The memory attention receives these unconditioned PE tokens stacks self- and cross- attention layers that condition the current frame's tokens on spatial memories and corresponding object pointers in the memory bank. Memories are encoded by fusing a frame's mask prediction with the unconditioned PE tokens from the image encoder and placed in the memory bank.

As in [2], the decoder includes an occlusion head to indicate the likelihood of the object of interest being visible in the current frame. During inference, the occlusion score may also be used to select frames to place in the memory bank adaptively.

SAM introduced the ability to output multiple valid masks when faced with ambiguity about the object being segmented in an image. For example, when a person clicks on the tire of a bike, the model can interpret this click as referring to only the tire or the entire bike and output multiple predictions. In videos, this ambiguity can extend across video frames. For example, if in one frame only the tire is visible, a click on the tire might relate to just the tire, or as more of the bike becomes visible in subsequent frames, this click could have been intended for the entire bike. To handle this ambiguity, SAM 2 predicts multiple masks at each step of the video. If further prompts do not resolve the ambiguity, the model selects the mask with the highest predicted IoU for the current frame for further propagation in the video although other strategies are possible.

Disambiguation Strategy

As outlined in § 3.1.1.2, tracking in videos can suffer from ambiguities in mask propagation, false predictions from the detector, or limitations of IoU-based matching in crowded scenes with highly overlapping objects. In this section, we present the details of the temporal disambiguation strategies used to address these challenges. We begin by introducing the notation used throughout this section.

Let and denote the set of detector outputs and the set of tracker's predicted masks on frame respectively. We define a frame-wise matching function for a masklet on frame as

where is the predicted output mask of object on frame . In addition, we define a Masklet Detection Score (MDS) over an interval as This score measures how a masklet is consistently matched to a detection within a temporal window. The first frame in which object appears is denoted .

Track Confirmation Delay

To reduce spurious and duplicate masklets, we delay the output of the model slightly. Specifically, the output at frame is shown only after observing frame . This delay provides temporal context for validating candidate masklets before outputting their masks. By default, we use which achieves good accuracy at a slight delay cost of around half a second for frames per second videos. During the delay, we apply the following two criteria to remove unconfirmed or duplicate masklets as follows.

Removal of Unconfirmed Masklets

A candidate masklet is considered unconfirmed within the confirmation window if their MDS is below a threshold, , and the masklet first appears within the window . If both conditions are satisfied within the confirmation delay, we remove the masklet from the tracker's state. We choose , requiring that the masklet has to be matched to a detection for at least half of the frames within the confirmation delay period to be confirmed. This strategy helps reject some false positive detections and not track them.

Removal of Duplicate Masklets

If the tracker temporarily fails to predict a mask for an object in some frames, but the detector continues to detect the object during those frames, this can lead to the creation of a new masklet for the same object. As a result, two masklets may end up tracking the same object: the original (older) masklet, and a new masklet that is initiated during the period when the tracker missed the object. To resolve this issue, during the confirmation delay period, if two masklets consistently overlap with the same detection, we remove the one that started later. Specifically, two masklets , are considered duplicates on frame if there exists a detection such that IoU and . If the two masklets and are found to be duplicates for at least frames, we remove the one with the latest first appearance only if it first appeared within the confirmation window . Empirically, we find that using gives the best results.

Masklet Suppression

For confirmed masklets that were not removed during the confirmation delay, we apply an additional suppression step: if a masklet’s MDS over its entire lifetime falls below zero at any frame (i.e. ), we suppress its output by zeroing out its mask. However, we retain the masklet in the tracker’s state, allowing for the possibility that the object may be confirmed in future frames. This strategy primarily addresses ambiguous detections, such as objects entering the scene near the boundary. For example, if only a person’s hands are visible as they enter the frame, the detector may be unable to determine whether the object matches the text prompt (e.g., impossible to distinguish between a man and a woman). In such cases, if the detector subsequently fails to detect the object after it fully enters the scene, the masklet suppression criterion ensures that these masklets are suppressed, unless they are consistently matched with new detections.

Periodic Re-Prompting. In challenging scenarios involving occlusions or visually similar distractor objects, the tracker may lose track of the target object. To address such tracking failures, we periodically re-prompt the tracker using the latest detection outputs. Specifically, on every -th frame , we compare each detection with the tracker’s current predictions . If a detection has a high overlap with the tracker’s prediction (i.e., ) and both the detection score and the masklet prediction score exceed a confidence threshold of 0.8, we re-initialize the tracker for that object using the detection output mask. We observed that re-prompting is most effective on frames where objects are not occluded and are fully visible, which motivates our choice of high confidence thresholds. In our experiments, we set by default. This periodic re-prompting helps the tracker recover from temporary failures and maintain accurate object tracking throughout the video.

Detection-Guided Re-Prompting. In cases where the tracker’s predictions may drift and its predicted masks become leaky, we employ the detectors' outputs. For each frame , we compare every detection with the tracker’s current predictions . If the highest-matching detection has a low bounding box (i.e., ) with the corresponding tracker prediction , we recondition the tracker for that object using the latest detector output. This approach ensures that the tracker remains synchronized with reliable detection results.

The impact of these strategies is ablated in Table 39, and they show quantitative improvements across our evaluation sets.

C.4 Model Training

C.4.1 Training Stages

SAM 3 is trained in 4 stages, with each stage introducing new capabilities or refining existing capabilities. Table 18 lists the data used in each stage.

Stage 1

Perception Encoder (PE) pre-training ([4]), which pre-trains the image and text encoders with 5.4 billion image-text pairs. In addition to broad concept coverage, this stage is key for robustness (see § A.1). Since the vision encoder has to support multiple tasks (while also not being too large) we opt for an "L " size; The vision and text encoders are transformers with 450M and 300M parameters respectively. We largely follow [4], but do not use distillation and do not perform video fine-tuning in this stage.

Stage 2

This stage is for detector pre-training and trains the (image-level) detector as well as the vision and text encoders with large-scale image segmentation data (including video frames as images). This stage uses both psuedo-labelled and human-annotated data, see Table 18. The main goal of this stage is broad concept coverage of (image, noun phrase, masks) tuples. At the end of this stage, the model is able to do open-vocabulary object detection, instance and semantic segmentation across many domains fairly well.

An additional goal of this stage is to prepare the base model for tasks in subsequent stages. To prepare for the PCS task, (image, noun phrase) pairs are randomly () converted into visual queries (i.e. noun phrase is dropped) or augmented with input bounding boxes ().

Besides training for the PCS task, in this stage, the model is also pre-trained on the visually prompted PVS task. This is done by adding 4 decoder queries specific to this task following the design of SAM 1 & 2. Training data includes images (e.g., SA-1B) and videos frames (e.g, SA-V), see Table 18; the number of interactivity steps is restricted to 4 for efficiency. We largely follow the settings from [2], but use the Align loss ([11]) in lieu of the IoU prediction loss, co-opting the classification head for object queries for this task.

We train for 95k iterations with a batch size of 896 with 5k warm up and cooldown steps using AdamW ([55]). We apply layer-wise learning rate decay ([56]) of 0.9 to the vision encoder. We use a reciprocal square-root schedule ([57]) and weight decay of 0.1. We use an initial learning rate of 5 -4, 1 -4 for vision and text encoder and 1 -3 for all other components. For boxes, we use and gIoU losses with weights of 5 and 2. Classification loss uses a weight of 100 and focal and dice losses use weights of 200 and 10 respectively. The encoder and decoder use a dropout of 0.1.

Stage 3

This stage further trains the model with the highest-quality human annotated image segmentation data, expands the interactivity capabilities and introduces post-training to improve detection performance.

Specifically, in terms of interactivity, (a) in the PVS task, the number of interactivity steps is increased to 7 and (b) interactivity is introduced into the PCS task, where positive or negative exemplars are provided based on model error, as described next. We iteratively sample box prompts to mimic the real user policy. Positive boxes are sampled from false negative errors, and we prompt their corresponding ground-truth boxes. Negative boxes are sampled from high-confidence false positive predictions that do not have significant overlap with ground truths. At each iteration, the box inputs are added on top of the previous ones. If both a valid positive and negative box exist, we randomly select one; if no valid candidates are available, no additional prompt is given. The process is repeated for 5 iterations.

The expanded interactivity in the PCS and PVS in this stage significantly slows down training, but the extensive pretraining with limited interactivity for the PVS and no interactivity for PCS (but using image exemplars together with text prompts) prepares the model well to ensure that a short stage 3 is sufficient.

This stage retains only the highest quality, exhaustivity verified data (e.g., {{SA-Co} /SYN} is dropped) and introduces a presence token (and presence loss) to better model presence of target segments and their location location greatly increasing the precision of the model. The presence loss is a binary cross-entropy loss with weight of 20. All learning rates are lowered by a factor of 0.025. We train for 5k iterations with a batch size of 512, with other settings identical to stage 2.

Stage 4

For video, the tracker decoder is trained on top of the frozen backbone. Freezing the backbone at this stage is made possible by pre-training on VOS data in previous stages at the video frame level. This stage retains the strong spatial grounding of the previous stage and focuses on spatial-temporal tracking without degrading other capabilities. We use a batch size of 512, train for 190k iterations using a cosine schedule with a peak learning rate of and a linear warmup of 1k iterations. We supervise the model’s outputs using a weighted sum of losses: a linear combination of focal and dice losses for mask prediction, mean absolute error (MAE) loss for IoU prediction, and cross-entropy loss for object occlusion prediction, with respective weights of 20:1:1:1. For multi-mask predictions, we only apply supervision to the mask with the lowest segmentation loss. If a frame’s ground truth does not include a mask, we do not supervise any mask outputs for that frame; however, we always supervise the occlusion prediction head, which determines whether a mask should be present. As in [2], we further fine-tune the tracker with a longer temporal context using 16-frame and 32-frame videos for 60k iterations, while scaling the learning rate by a factor of 0.1.

C.4.2 Additional Training Settings

Data augmentation

For the PCS task, we apply the following transformations:

- Geometric: We use some cropping and resizing to vary the aspect ratios and help with small objects. The input resolution of our model is always a fixed square (usually ). During evaluation, the images are resized to this size, without respecting their aspect ratio. During training, we apply our augmentations, and pad if the resulting size is smaller than . We found it important to randomly distribute the padding on all sides, to avoid creating biases towards one particular region of the image. If the dataset does not contain notions of left and right, we also apply random horizontal flips.